Free Speech in the Era of Its Technological Amplification

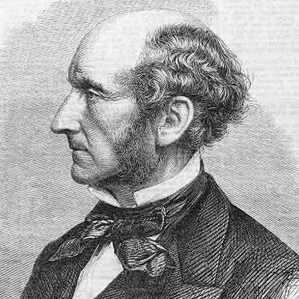

Greetings, Pale Ghost. I don’t know what news reaches you in the afterlife—whether there is a gossipy daily bulletin, the Heavenly Gazette, filled with our doings; or if new arrivals bring stories of developments on Earth; or if you still care about us at all—but much has changed since you died in 1873. Some of those changes would gratify your liberal spirit; still others, vex. A few would baffle.

Adult suffrage is universal in democratic countries: women, for whose rights you campaigned so assiduously, may vote in Great Britain, the United States, Europe, Latin America, and much of Asia, including India. (The last should especially please the former chief examiner of correspondence at India House.) On the other hand, socialism enjoyed only a fleeting historical success in a few countries, because it contradicted the liberal principles you championed. No one solved what in your Autobiography you called “the social problem of the future”: “how to unite the greatest liberty of individual action, with a common ownership of the raw material of the globe, with an equal participation of all in the benefits of combined labour.”

The most sweeping changes have been technological. Less than one hundred years after you died, engineers constructed an “Electronic Numerical Integrator and Computer,” heir to the “analytical engine” that your contemporary Charles Babbage planned but could not build, which could be programmed for general purposes, as Ada Lovelace had hoped. Billions of these so-called computers now exist—in homes, on desks, in walls, embedded into the very stuff of life—and they are connected in a planetary network, called the Internet, similar to the telegraph. We use them to communicate, write, and calculate, and to consult an immaterial library housing most of humanity’s knowledge. It’s hard to describe.

I’m sorry to say that the history of your own reputation has been mixed. For decades, your System of Logic (1843) and Principles of Political Economy (1848) were the standard texts. As late as the 1980s, when I was up at Oxford, they were still assigned. However, both fields have been formalized in ways you could not anticipate, and your books have been utterly superseded. But your lucid little book On Liberty (1859) has endured, as you predicted: “The Liberty is likely to survive longer than anything else that I have written, because … it [is] a kind of philosophic text-book of a single truth.”

That truth, now so famous that it is simply called “Mill’s harm principle,” is worth quoting in full. I have in front of me the broken paperback copy of On Liberty that I first read at boarding school.

The sole end for which mankind are warranted, individually or collectively, in interfering with the liberty of action of any of their number is self-protection … The only purpose for which power can be rightfully exercised over any member of a civilized community, against his will, is to prevent harm to others. His own good, either physical or moral, is not a sufficient warrant. He cannot rightfully be compelled to do or forbear because it will be better for him to do so, because it will make him happier, because, in the opinions of others, to do so would be wise or even right … Over himself, over his own body and mind, the individual is sovereign.

Your harm principle has ever since guided open societies in the regulation of what may be said, written, or shown—what is commonly called freedom of speech, the subject of my letter to you. For although the First Amendment to the U.S. Constitution commands that “Congress shall make no law … abridging the freedom of speech, or of the press,” and the U.N.’s Universal Declaration of Human Rights (1948) states, “Everyone has the right to freedom of expression,” all thoughtful people know that the freedom is qualified. Free speech is a contingent right that butts up against other rights and mores, some explicitly protected by laws, others implicitly understood, still others not yet established but possessing their advocates. Yet while this has been long recognized, the arduous business of explicitly defining the limits of what may be expressed is a relatively recent activity, and it owes everything to On Liberty. In the United States, since the Supreme Court decided Schenck v. United States (1919), the government has had to prove that any speech it seeks to limit would present a “clear and present danger.” (Oliver Wendell Holmes, writing the unanimous opinion, gave the famous example of a person falsely shouting “Fire!” in a crowded theater.) Subsequently, the court has elaborated that test to protect advocacy of illegal action up to the point where a serious and imminent crime is “likely” to occur. (The 1969 ruling in Brandenburg v. Ohio allowed Clarence Brandenburg, a Ku Klux Klan leader, to call at a rally for “revengeance” against “niggers” and “Jews” but not, by implication, to instruct his followers to lynch Mr. Washington at 33 Linden Street.)

The court has defended defamatory speech, “fighting words,” and indecency. Not only in America, but in all nations committed to protecting the right, the standard for freedom of speech has become presumptively absolutist. Everyone presumes they may say what they like without penalty, unless censors can show that questionable speech would irremediably and immediately harm someone else.

Your principle, in the jargon of engineers, “scaled” as new technologies and historical circumstances appeared and the globe became crowded with new people. Critics had noted that you never clearly defined “harm” but seemed to mean physical harm. That was clearly insufficient to practical purposes, and “harm” was everywhere expanded to include commercial damage, which is why copyright law sharply circumscribes what may be quoted or sampled without permission of the author or publisher. But your single truth has held. That is, until now.

The Sunny Compromise

Three recent events call into question whether your principle will continue to scale in the future. Some of the terms and concepts I use will be unfamiliar. I understand Steve and Aaron have joined you in the fields of gold. Ask them to explain.

The companies that own the most popular sites on the Internet are incorporated in the United States, which enjoys the most expansive protections for free speech as well as the narrowest limits. Legally bound by the limits but not by the protections of the First Amendment and its case law, they have nevertheless followed the American standard in the terms of service they impose on their users. They have encouraged free speech where it is consistent with their businesses, and limited expressions illegal in the United States, such as lewd photographs of a child, which are evidence of a crime. More, the companies were founded in California’s Silicon Valley, whose political culture can seem perplexingly libertarian even to other Americans. (The chief executive of one, Twitter, has called his company “the free speech wing of the free speech party.”) But as the technologies created by these companies have come to touch nearly everyone who lives, their peculiar understanding of free speech has collided with different notions of what forms of expression are legal or proper.

The problem is that “harm” has been variously understood; there is no common definition. Democratic nations not part of what used to be called the Anglo-Saxon tradition have interpreted the word more broadly than Great Britain, the United States, Canada, Australia, or New Zealand. Because of two great wars and the murder of six million Jews by the German state, much of Europe outlaws political speech associated with revanchist nationalist parties, as well as expressions of hatred for the Jewish people. In Scandinavia, many kinds of hate speech are illegal because they are thought harmful to individual dignity. Even in the Anglophone countries, a narrow definition of harm emphasizing physical or commercial damage is not universally accepted: since the 1970s, some feminists have argued that pornography is a kind of violence against women. Further, free speech is by no means accepted as a universal right. China, the last great tyranny on Earth, absolutely bans anything subversive of the Communist Party’s rule. Thai law will not permit criticism of the king. Wherever Sharia law is the foundation of a nation’s legal code (and also in many countries, like India, where there is a very large Muslim population), depictions of the Prophet Muhammad are illegal, because Muslims believe such representation is blasphemous.

To all these, American Internet companies have proposed a sunny compromise. To governments whose understanding of free speech departs from the American standard, they have promised: we will comply with local laws. To communities convinced that hateful expression is harmful, they’ve said: we will censor hate speech. The compromise is a hack designed by Silicon Valley’s engineers and lawyers to allow different legal and cultural conceptions of what may be expressed to coexist on sites used all over the world. But it has been a fidgety hack, requiring awkward accommodations.

In July of 2012, “Sam Bacile,” later identified by the U.S. government as a Coptic Egyptian named Nakoula Basseley Nakoula, uploaded to YouTube two short videos, “The Real Life of Muhammad” and “Muhammad Movie Trailer.” The videos were in English and purported to be trailers for a full-length movie, which has never been released; both depicted the Prophet Muhammad as a womanizer, a homosexual, and a child abuser. As examples of the filmmaker’s art they were clownish. Nakoula, their producer, wished to provoke, but the videos languished on YouTube unseen and might never have been noticed had not Egyptian television, in September, aired a two-minute excerpt dubbed into Arabic. Enterprising souls provided Arabic captions for the videos, soon collectively named The Innocence of Muslims; millions watched them. In reaction, some Muslims rioted in cities all over the world.

The videos placed Google, which owns YouTube, in a difficult position. The company boasts of an official “bias in favor of free expression.” Ross LaJeunesse, its global head of free expression and international relations, says, “We value free speech because we think it’s the right thing to do for our users, and for society at large. We think it’s the right thing for the Internet, and we think it’s the right thing to do for our business. More speech and more information lead to more choices and better decisions for our users.”

The U.S. government asked Google to review the videos in order to determine whether they violated the company’s terms of service. A decision doubtless seemed urgent. In September, protesters breached the walls of the U.S. embassy in Cairo and replaced the Stars and Stripes with the black banner of militant Islam. Later that month, an American consulate in Benghazi, Libya, was attacked, and the U.S. ambassador and three other Americans were killed (an event thought at the time to be connected with the videos). By December, 600 people had been injured in demonstrations; 50 to 75 were dead. A consistent demand of the rioters, who mostly lived in countries without free speech and where the government licensed or directly owned the media, was that “America” should remove the videos from YouTube.

What was Google to do? As LaJeunesse explains, the company worries about requests to censor anything; after all, its mission is “to organize all the world’s information.” In general, the company complies with local laws, blocking search results or content illegal within a country, except when laws are so at odds with its corporate principles that senior management feels it cannot operate there. (Such was the case in China, from which Google retreated in March 2010; today the company has a research division inside the country, but search requests are rerouted to Google.com.hk in Hong Kong.) Specific requests to remove information are treated differently depending on whether they affect Google’s search business, its advertising networks, or its platforms, YouTube and Blogger. When a search result is suppressed, Google shows users that the result has been removed. Advertising on Google’s ad networks, AdWords and AdSense, must adhere to the company’s guidelines. Material posted by third parties to YouTube or Blogger must conform to the platforms’ terms of service. (For instance, YouTube’s Community Guidelines prohibit sexually explicit images, hate speech, and “bad stuff like animal abuse, drug abuse, under-age drinking and smoking, or bomb making.”) So much is posted to YouTube and Blogger that the company accepts only limited “intermediary liability,” looking to its community to flag material that violates guidelines. Google is high-mindedly transparent about all requests to remove information: the company publishes a “Transparency Report” that describes requests from copyright owners and governments to remove information from its services (as well as requests from governments and courts to hand over user data).

Google rejected the U.S. government’s request to suppress The Innocence of Muslims, because the videos didn’t violate YouTube’s terms of service. (Although YouTube’s prohibition on hate speech does include any expressions that demean a group “based … on religion,” Google decided that the videos criticized the texts, stories, and prophets of Islam but not Muslims themselves—a nice distinction to the millions of Muslims who felt insulted.) The company did block the videos in Saudi Arabia, India, and Indonesia, where the material was illegal. Other Muslim countries just blocked YouTube altogether. But in the middle of September, Google chose to temporarily restrict access to the videos in Egypt and Libya, because it worried about the “very sensitive situation in those countries.” For slightly less than three weeks, Egyptians and Libyans could not watch The Innocence of Muslims. It was an unprecedented decision: Google had effectively announced that if protesters objected to something with sufficient violence, it would suppress legal speech that was consistent with its community guidelines.

The second event ensnared Twitter in Europe’s idiosyncratic conceptions of free speech. The company has tried to conform to the sunny compromise. In January of 2012, it announced something called “country-withheld content”: it would suppress tweets within a country in response to “a valid and properly scoped request from an authorized entity.” Twitter hopes to be as transparent as Google, too: users must know a tweet has been censored. The first application of the policy was the blocking of Besseres Hannover, an outlawed anti-Semitic and xenophobic organization, in response to a request by the legislature of Lower Saxony. Beginning last October, German followers of Besseres Hannover were shown a grayed-out box with the words “@hannoverticker withheld” and “This account has been withheld in: Germany.” The company’s lawyer, Alex MacGillivray (@amac), tweeted: “Never want to withhold content; good to be able to do it narrowly and transparently.”

No one felt much outrage when an obscure Hannoverian neo-Nazi group was put down. But Twitter’s next use of country-withheld content was more troubling, because it was more broadly applied. Not long after the company blocked Besseres Hannover, in response to complaints from the Union of French Jewish Students, it chose to censor tweets within France that used the hashtag “#UnBonJuif” (which means “a good Jew”). French tweeters had been using the hashtag as the occasion for a variety of anti-Semitic gags and protests against Israel. (Samples: “A good Jew is a pile of ash” and “A good Jew is a non-Zionist Jew.”) The tweets were not obscure: when it was taken down, #UnBonJuif was the third-most-popular trending term in France. But expressions of anti-Semitism are actual crimes in the Fifth Republic. The student union therefore asked Twitter to reveal information that could be used to identify the offending tweeters; the company declined, and the union took the case to civil court. In January, the Tribunal de Grande Instance ruled that Twitter must divulge the names of French anti-Semitic tweeters so that they could be prosecuted. The court also ruled that the company should create some mechanism to let its users flag “illegal content, especially that which falls within the scope of the apology of crimes against humanity and incitement to racial hatred” (as already exists on YouTube, for instance). As I write to you, Twitter has two weeks to respond. If the company neither hands over its users’ information nor withdraws from France (neither very likely), then it is unable to apply the sunny compromise consistently. Instead, Twitter will comply with the local laws it finds convenient.

The third event upset observers who value privacy, respect women, and worry about sexual predation. Reddit, an online bulletin board whose links and material are generated by its community of users, hosts forums called “subreddits”; until recently, a number were dedicated to sharing photographs of pubescent and teenage girls. These subreddits were part of a larger trend of websites catering to a taste for images of young women who never consented to public exposure (examples include “self-shots,” where nude photos intended to titillate boyfriends find their way online, and “revenge porn,” sexually explicit photos of women uploaded to the Internet by bitter ex-boyfriends). The two most popular subreddits publishing images of young women, “r/jailbait” and “r/creepshot,” didn’t traffic in illegal child pornography; the forums’ moderator, an Internet troll known as Violentacrez, was much valued by Reddit’s registered users, called “redditors,” for his industriousness in removing unlawful content. (I speak from hearsay; I never visited r/jailbait and r/creepshot.) Instead, the photographs of underage girls showed them clothed or partially clothed, and they were taken in public (where American courts have decided no one has a reasonable expectation of privacy). Inevitably, r/jailbait and r/creepshot attracted wide opprobrium (little wonder: a popular shot was the “upskirt”), and Adrian Chen, a writer at Gawker, identified (or “doxxed”) Violentacrez as Michael Brutsch, a 49-year-old Texan computer programmer. Brutsch promptly lost his job and was humiliated.

The redditors were unhappy, arguing that doxxing Violentacrez violated his privacy and betrayed the principles of free speech to which Reddit was committed. The moderators of r/politics fulminated, without apparent irony: “We feel that this type of behavior is completely intolerable. We volunteer our time on Reddit to make it a better place for the users, and should not be harassed and threatened for that. Reddit prides itself on having a subreddit for everything, and no matter how much anyone may disapprove of what another user subscribes to, that is never a reason to threaten them.”

In retaliation for Chen’s article, the moderators of r/politics deactivated all links to Gawker, a sort of censorship of Gawker within Reddit’s borders. Yishan Wong, the chief executive of Reddit, mildly noted that the delinking “was not making Reddit look so good,” but he insisted in a memo to his users, “We stand for free speech. This means we are not going to ban distasteful subreddits.” Then Reddit banned r/jailbait and similar forums, without revising the site’s rules.

Silicon Valley’s presumptively absolutist standard of free speech, based on a narrow definition of harm, was exported to parts of the world that did not comprehend the standard or else did not want it. In all three cases I describe, the sunny compromise was considered by the parties involved and, under challenge, collapsed.

Free Speech Matters

John Stuart Mill, the Internet itself has a bias in favor of free expression. More, its technologies amplify free speech, widely distributing ideas and attitudes that would otherwise go unheard and cloaking speakers in pseudonymity or anonymity. In order to seem harmless, American Internet companies will fiddle with the sunny compromise, but it is an unsatisfactory hack. The Internet’s amplification of free speech will be resented by those who don’t like free speech, or whose motto is “Free speech for me but not for thee.” It will all be very messy, and sometimes violent. All over the world people hate free speech, because it is a counterintuitive good.

Who hates free speech? The powerful and the powerless: ruling parties and established religions, those who would suppress what is said in order to retain power, and those who would change what is said in order to alter the relations of power. Who else? Those who do not wish to be disturbed also hate free speech. Why, they might say, should I care about free speech? I have nothing to say; and insofar as things should be said at all, I only want to hear the things that people like me say. Why should I have to hear things that are offensive, immoral, or even mildly irritating?

In the Liberty you provided answers to those who hate free speech. Your main explanation was bracingly utilitarian, as befitted the son of James Mill. We value free speech, you wrote, because human beings are fallible and forgetful. Our ideas must be tested by argument: wrong opinion must be exposed and truth forced to defend itself, lest it “be held as a dead dogma, not a living truth.” (Your consequentialist followers said a flourishing marketplace of ideas was a precondition of participatory democracy and even of an innovative economy.) But after your youthful crisis of faith, when you rejected your father’s system of thought, you were never a crabbed utilitarian: in your maturity, you always described utility broadly as that which tended to promote happiness, and you defined happiness so that it included intellectual and emotional pleasures. You believed we must be free to “[pursue] our own good in our own way, so long as we do not attempt to deprive others of theirs or impede their efforts to obtain it.” Freedom of expression is both useful and moral, and the consequentialist and deontological justifications of free speech “climb the same mountain from different sides.”

Because free speech is so important, and because the Internet will continue to amplify its expressions, U.S. Internet companies should apply a consistent standard everywhere in deciding what they will censor upon request. (Their terms of service are their own business, so long as they are enforced fairly.) The only principle I can imagine working is yours, where “harm” is interpreted to mean physical or commercial injury but excludes personal, religious, or ideological offense. The companies should obey American laws about what expressions are legal, complying with local laws only when they are consistent with your principle, or else refuse to operate inside a country. In the final analysis, humans, prone to outraged rectitude, need the most free speech they can bear.

Heaven, I know, governs our affairs without a chief executive but with rotating committees of souls. (Vladimir Nabokov and Richard Feynman cochair the Committee on Light and Matter, where Nabokov oversees a Subcommittee on the Motion of the Shadow of Leaves on Sidewalks.) You argue all the time. Down here, we must follow your example although our circumstances are different. We have a right to say whatever we wish so long as we do not harm others, but we cannot compel others to listen, or expect never to be offended.
