Why Jony Ive Shouldn’t Kill Off Apple’s Skeuomorphic Interfaces

Earlier this week Apple fired Scott Forstall, the architect of its iOS platform, and handed his duties over to the company’s chief industrial designer, Jonathan Ive. Ive and Forstall had an infamously chilly working relationship, and one of their biggest disagreements was over the role of so-called “skeuomorphic” design in Apple’s products. Forstall, like his mentor Steve Jobs, favored it; Ive disliked it. To many observers, Forstall’s forced exit looks like a vindication of Ive’s stance. But if Ive wants to continue Apple’s enviable trend of innovation, he’d be a fool to throw the baby of skeuomorphism out with Forstall’s bathwater.

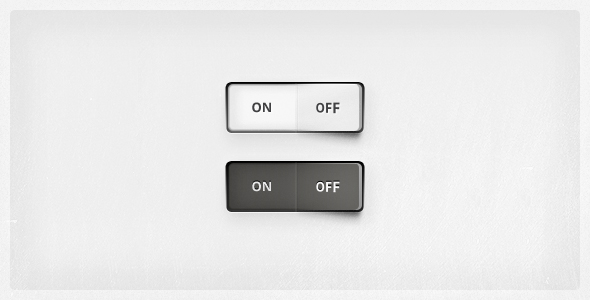

A quick definition for design non-wonks: skeuomorphic UIs are designed to mimic the appearance of physical objects that perform the same function. For example, the iPhone calculator app literally looks like a real-world calculator, complete with Chiclet-shaped buttons arrayed beneath a thin “screen” that displays the numbers. There’s no need for a digital calculator to look like its physical counterpart, of course. That’s the hallmark of skeuomorphism: design elements that were functional and necessary in the “real world” become purely ornamental in the digital one.

It makes sense that Ive would hate skeuomorphism. It’s not a useful solution for any design problem he’s ever faced at Apple before. Physically, Apple’s products must look and feel like the future: sleek, aspirational, with form fused seamlessly and perfectly to function. Ive is justly renowned for inexorably reducing Apple’s industrial design down to its purest essence. A modern iMac is little more than a thin glass screen hovering on a pedestal. Why should the future of the PC be anything more (or less)? Skeuomorphic nostalgia is antithetical to Ive’s industrial-design ethos, with good reason.

But digital interface design is a whole different animal. Apple’s genius has always been in creating innovative UIs that look and feel like something much better than the future: the familiar, comfortable, comprehensible present. How? Skeuomorphism. From the very first “trashcan” on the 1984 Mac to the glassy faux-3D sheen on the iPhone’s app icons, the s-word has been crucial to advancing and maintaining Apple’s vaunted reputation for “intuitiveness.”

The original iPhone is proof positive that skeuomorphism is incredibly powerful when introducing a genuinely innovative product. Ive’s futuristic hardware made it desirable, but Forstall’s friendly iOS made it approachable for untold millions of consumers who had never before considered buying a smartphone. Without skeuomorphism, iOS might never have become the 800-pound gorilla of mobile computing that it is today.

Five years later, have Forstall (and Steve Jobs, lest we forget) taken skeuomorphism too far? Probably. The much-maligned leather stitching in iCal and torn-paper accents in Notes certainly seem tacky compared to the sci-fi minimalism of Windows Phone’s “Metro” design language. But did that stop Apple from selling more iPhone 5 units than ever? Nope. Windows Phone may look like the future, but iPhone looks like home.

And that’s what Jony Ive would do well to remember as he assumes control over Human Interface at Apple. Rolling back Forstall’s skeuomorphic excesses will burnish Apple’s reputation among tech-tastemakers and create a more consistent user experience for everyone else (whether they notice or not) as iOS and Mac OS continue to converge. But those are mature products where incrementalism has largely replaced innovation. The next domain that Apple disrupts with a truly innovative user experience – television, perhaps – will undoubtedly benefit from a measured dose of Apple’s skeuomorphic special sauce. In fact, it just wouldn’t be Apple without it.
