Computer Scientists Reproduce the Evolution of Evolvability

Engineers have long known that the best way to build stuff is in modules. If one module goes wrong, it’s then straightforward to replace it. Examples include the graphics card in a computer, the alternator in a car and a camera in the Hubble Space Telescope.

By contrast, when a single complex system goes wrong, it’s hard to fix, since all the parts are interdependent. Think of the economy or financial markets.

It might come as no surprise to discover that nature has also learnt this trick. Biological systems tend to be modular, particularly those that can be thought of as networks, such as brains, gene regulatory networks and metabolic pathways. (Networks are modular if they contain highly connected clusters of nodes that are only loosely connected to other clusters.)
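To make that parenthetical definition concrete, one widely used score for how modular a network is happens to be Newman's modularity Q: the fraction of connections that fall inside clusters, minus the fraction you would expect if the same nodes were wired at random with the same number of links each. The sketch below assumes an undirected, unweighted network and a cluster assignment that is already known. The function name `modularity` is illustrative, and nothing here claims this is the exact measure Lipson and co used.

```python
def modularity(edges, community):
    """Newman's modularity Q for an undirected, unweighted network.

    edges: list of (u, v) pairs.
    community: dict mapping each node to its cluster label.
    """
    m = len(edges)
    # Count each node's degree (number of connections it takes part in)
    degree = {}
    for u, v in edges:
        degree[u] = degree.get(u, 0) + 1
        degree[v] = degree.get(v, 0) + 1
    # Observed fraction of connections that stay inside a cluster
    inside = sum(1 for u, v in edges if community[u] == community[v]) / m
    # Expected fraction under random rewiring that preserves node degrees
    degree_per_cluster = {}
    for node, k in degree.items():
        c = community[node]
        degree_per_cluster[c] = degree_per_cluster.get(c, 0) + k
    expected = sum((d / (2 * m)) ** 2 for d in degree_per_cluster.values())
    return inside - expected
```

Two triangles joined by a single link score 5/14 (about 0.36) when each triangle is treated as a cluster, while lumping every node into one cluster scores 0.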

But that raises an important question: how did biological networks get this way? There must be some evolutionary pressure to form modular networks, but what?

The mystery is compounded because of the advantages that modularity produces. It makes systems more evolvable when conditions change. Since mutations generally influence a single module, they lead to specific small changes to the system’s fitness. Evolution easily selects for or against these changes.

Without modularity, systems cannot evolve easily because mutations tend to influence the entire system in dramatic ways that are rarely advantageous.

Indeed, various experiments and simulations have shown that modular systems are more evolvable than non-modular systems.

Modularity gives a clear advantage, but only once it already exists. That doesn’t explain why it evolves in the first place.

There is no shortage of ideas, however. For example, one theory is that modularity evolves in rapidly changing environments that have common subproblems but different overall problems. However, there is little evidence that real environments are like this.

For this reason the evolution of modularity is one of biology’s most important open questions.

Today, Hod Lipson at Cornell University in Ithaca and a couple of buddies say they’ve cracked it. These guys say the key factor that has gone unappreciated until now is the cost of creating and maintaining a network. “Modularity evolves not because it conveys evolvability, but as a byproduct from selection to reduce connection costs in a network,” they say.

These costs are things like the cost of manufacturing connections and maintaining them, the energy required to transmit information along them and the signal delays that occur. All of these things increase with the number of connections and their length.
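One simple way to put a number on wiring cost is to lay the network out in space and add up the lengths of its connections, so the total rises with both how many links there are and how long they are. In this sketch the node coordinates, the `connection_cost` name and the option to square each length are all illustrative assumptions, not details taken from the paper.

```python
import math


def connection_cost(positions, connections, squared=False):
    """Total wiring cost of a network laid out in 2-D space.

    positions: dict mapping node -> (x, y) coordinates (hypothetical layout).
    connections: iterable of (u, v) pairs.
    Each connection adds its Euclidean length, or its squared length if
    squared=True, so cost grows with both the number and length of links.
    """
    total = 0.0
    for u, v in connections:
        (x1, y1), (x2, y2) = positions[u], positions[v]
        d2 = (x1 - x2) ** 2 + (y1 - y2) ** 2
        total += d2 if squared else math.sqrt(d2)
    return total
```

For nodes at (0, 0), (3, 4) and (0, 1), linking the first node to each of the others costs 5 + 1 = 6, or 25 + 1 = 26 with squared lengths.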

“The strongest evidence that biological networks face direct selection to minimize connection costs comes from the vascular system and from nervous systems, including the brain, where multiple studies suggest that the summed length of the wiring diagram has been minimized,” they say.

Clearly there are important advantages in reducing the costs of running a network.

To test this idea, Lipson and co created a computer environment that could measure the fitness of various networks at a straightforward task: recognising a simple pattern of inputs.
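Measuring fitness on a pattern-recognition task amounts to showing the network every possible input and counting how often it gives the right answer. The task below (an 8-pixel binary input, with the answer 1 when both halves contain at least two active pixels) and the single threshold unit standing in for a network are hypothetical choices made to keep the sketch short; they are not the setup from the paper.

```python
from itertools import product

N_INPUTS = 8  # hypothetical: an 8-pixel binary input


def target(pattern):
    """Hypothetical task: answer 1 if both halves have >= 2 active pixels."""
    left, right = pattern[:4], pattern[4:]
    return int(sum(left) >= 2 and sum(right) >= 2)


def network_output(weights, bias, pattern):
    """Stand-in network: one threshold unit that fires if its input sum > 0."""
    s = bias + sum(w * x for w, x in zip(weights, pattern))
    return int(s > 0)


def performance(weights, bias):
    """Fraction of all 2**8 input patterns the network classifies correctly."""
    patterns = list(product((0, 1), repeat=N_INPUTS))
    correct = sum(network_output(weights, bias, p) == target(p) for p in patterns)
    return correct / len(patterns)
```

A unit with all-zero weights and a zero bias never fires, so it scores 135/256, which is simply the share of patterns whose correct answer is 0.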

To start off, the networks are random and none perform particularly well. But by chance, some perform a little better than others and these are preferentially reproduced in the next generation. However, the next generation is not an exact copy of the first but includes some random changes.
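The cycle of random starting networks, preferential reproduction of the better performers and imperfect copying can be sketched as a short loop. This is a minimal, generic version under stated assumptions: genomes are plain lists of numbers, the better half of each generation survives (truncation selection), and offspring get small Gaussian mutations. The function `evolve` and its parameters are hypothetical, and the study’s own algorithm may differ.

```python
import random


def evolve(fitness, genome_length, pop_size=50, generations=200,
           mutation_sd=0.1, seed=0):
    """Minimal evolutionary loop: random start, selection, mutated offspring."""
    rng = random.Random(seed)
    # Generation 0: random genomes, most of which will perform poorly
    population = [[rng.uniform(-1, 1) for _ in range(genome_length)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        # Rank by fitness; the better half become parents (truncation selection)
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]
        # Offspring are imperfect copies: each gene gets small Gaussian noise
        children = [[g + rng.gauss(0, mutation_sd) for g in rng.choice(parents)]
                    for _ in range(pop_size - len(parents))]
        population = parents + children
    return max(population, key=fitness)
```

For example, `evolve(lambda g: -sum((x - 0.5) ** 2 for x in g), 4)` returns a genome whose entries sit close to 0.5, because that is where this toy fitness peaks. Keeping the parents alongside their offspring means the best genome found so far is never lost.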

This process, known as evolutionary computing, closely mimics the process of biological evolution.

However, a crucial factor is the criterion the computer uses to evaluate the fitness of each generation of networks.

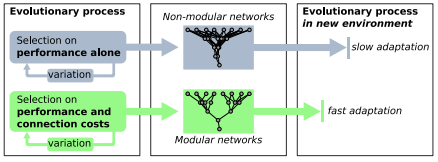

Lipson and co carried out this simulation using two different criteria. The first was a simple measure of whether a network was better at recognising the input pattern or not. However, the second criterion also took into account the cost of running the network, with more efficient networks being fitter.
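The difference between the two criteria comes down to what goes into the fitness score. The article doesn’t say how performance and cost were combined, so this sketch simply subtracts a weighted cost penalty. `cost_weight` and the two example candidates are hypothetical, and the study may well have used a different scheme, such as treating performance and cost as separate objectives.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    performance: float      # fraction of input patterns recognised correctly
    connection_cost: float  # e.g. summed wiring length (hypothetical units)


def fitness_performance_only(c):
    """Criterion 1: only task performance counts."""
    return c.performance


def fitness_with_cost(c, cost_weight=0.01):
    """Criterion 2: performance minus a penalty on connection cost.

    cost_weight is a hypothetical trade-off parameter, not from the paper.
    """
    return c.performance - cost_weight * c.connection_cost


# Two hypothetical networks that solve the task equally well
dense = Candidate("dense", performance=1.0, connection_cost=40.0)
sparse = Candidate("sparse", performance=1.0, connection_cost=12.0)
```

Both candidates tie under the first criterion, but under the second the sparser network wins (0.88 versus 0.60), so selection steadily favours cheaper wiring whenever performance is equal.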

The results are significant. Lipson and co say that both fitness criteria produce networks capable of accurately identifying the input patterns after 25,000 generations. However, the second criterion produces modular networks while the first does not.

That’s clear and simple evidence that modularity emerges when network costs are taken into account. And of course in a world of limited resources, network costs are always a factor. An important byproduct is that modularity makes a system more evolvable, but this is not the factor that generates modularity in the first place.

That explains for the first time one of nature’s most important organising principles.

Lipson and co’s work has important implications. In the last 20 years, engineers have begun to use evolutionary computing for ever more complex challenges, everything from analysing X-ray images and data sets to designing manufacturing schedules and parts for supersonic aircraft.

But while engineers have known the importance of modularity, they’ve never known how to evolve it. “Knowing that selection to reduce connection costs produces modular networks will substantially advance fields that harness evolution for engineering,” say Lipson and co.

It should also make it easier for engineers to understand synthetically evolved systems, which can often solve problems without any human knowing how.

There’s clearly more to come here and it will be fascinating stuff!

Ref: arxiv.org/abs/1207.2743: The Evolutionary Origins Of Modularity
