Fundamental Law of High Speed Flying Maneuverability Discovered

The extraordinary ability of birds and bats to fly at speed through cluttered environments such as forests has long fascinated humans. It raises an obvious question: how do these creatures do it?

Clearly they must recognise obstacles and exercise the necessary fine control over their movements to avoid collisions while still pursuing their goal. And they must do this at extraordinary speed.

From a conventional command and control point of view, this is a hard task. Object recognition and distance judgement are both hard problems, and route planning is even tougher.

Even with the vast computing resources that humans have access to, it’s not at all obvious how to tackle this problem. So how flying animals manage it with immobile eyes, fixed focus optics and much more limited data processing is something of a puzzle.

Today, Ken Sebesta and John Baillieul at Boston University reveal how they’ve cracked it. These guys say that flying animals use a relatively simple algorithm to steer through clutter and that this has allowed them to derive a fundamental law that determines the limits of agile flight.

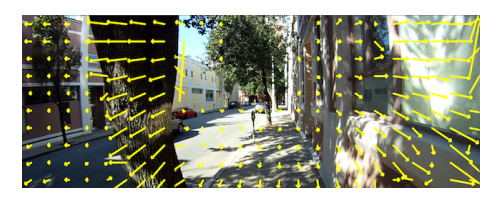

Their approach relies on an idea called optical flow sensing which has been the subject of growing attention in recent years. The idea here is to think of the field of view, not as a set of discrete objects at different distances, but simply as an array of points that move across the field of vision.

The rate of movement across the field of view depends on factors such as the size and distance of the object as well as the speed of flight.

However, the optics of eyesight significantly simplifies certain calculations about this system. In particular, it allows a very simple determination of an imminent collision.

It turns out that, given an eyeball flying at a constant velocity towards an object, the size of the object’s image on the retina, divided by the rate at which that image is growing, gives the time to impact. That’s a simple calculation that requires no knowledge of the object’s size, distance or even of the closing speed.
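To make the time-to-impact idea concrete, here is a minimal Python sketch. It assumes a small object approached head-on at constant speed, and estimates the time to impact as the object's angular size divided by the rate at which that angle grows. The function names and the numbers in the scenario are hypothetical, chosen only for illustration; this is not code from Sebesta and Baillieul's paper.

```python
import math


def angular_size(size, distance):
    """Visual angle (radians) subtended by an object of a given size and distance."""
    return 2.0 * math.atan(size / (2.0 * distance))


def time_to_impact(theta_prev, theta_now, dt):
    """Estimate time to impact from two angular-size samples taken dt seconds apart.

    Uses only quantities available on the retina: the image size and how fast
    it is growing. Object size, distance and closing speed are never supplied.
    """
    theta_dot = (theta_now - theta_prev) / dt  # finite-difference growth rate
    if theta_dot <= 0:
        return math.inf  # image is not growing, so no approach is detected
    return theta_now / theta_dot


# Hypothetical scenario, used only to generate the two retinal samples:
# a 0.5 m object, 20 m away, approached at 10 m/s, sampled every 10 ms.
size, distance, speed, dt = 0.5, 20.0, 10.0, 0.01
theta_prev = angular_size(size, distance)
theta_now = angular_size(size, distance - speed * dt)

tau = time_to_impact(theta_prev, theta_now, dt)
print(f"estimated time to impact: {tau:.2f} s")
```

The estimate comes out close to the true value of distance divided by speed, roughly two seconds here, even though `time_to_impact` only ever sees the two angles and the sampling interval.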

It then becomes relatively straightforward to determine when a collision is imminent and to adjust course accordingly. That’s something that can be done with direct feedback from the optical system in a highly efficient way.

The work that Sebesta and Baillieul have done is to generalise this calculation for any point in the visual field and to calculate not just when a collision is imminent but when the eyeball passes the object.
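The same trick can be sketched for a point that is off to one side rather than dead ahead. Assuming straight flight at constant speed past a stationary point, simple geometry gives the time until the flier draws level with that point as sin(2φ) / (2 dφ/dt), where φ is the point's bearing relative to the direction of travel. The Python below illustrates that identity with hypothetical names and numbers; it is not necessarily the formulation used in the paper.

```python
import math


def bearing(along, lateral):
    """Bearing (radians) of a point relative to the heading.

    along:   distance to the point measured along the flight path
    lateral: sideways offset of the point from the flight path
    """
    return math.atan2(lateral, along)


def time_to_passage(phi_prev, phi_now, dt):
    """Estimate time until the flier draws level with a point.

    Uses only the point's bearing and how fast that bearing is changing.
    Assumes straight, constant-speed flight and a stationary point.
    """
    phi_dot = (phi_now - phi_prev) / dt  # finite-difference bearing rate
    if phi_dot == 0:
        return math.inf  # dead-ahead point: bearing carries no timing cue
    return math.sin(2.0 * phi_now) / (2.0 * phi_dot)


# Hypothetical scenario, used only to generate the two bearing samples:
# a point 15 m ahead and 3 m to the side, speed 10 m/s, sampled every 1 ms.
along, lateral, speed, dt = 15.0, 3.0, 10.0, 0.001
phi_prev = bearing(along, lateral)
phi_now = bearing(along - speed * dt, lateral)

tau_pass = time_to_passage(phi_prev, phi_now, dt)
print(f"estimated time to passage: {tau_pass:.2f} s")
```

The result is close to the along-track distance divided by the speed, about 1.5 seconds in this scenario, again without the estimator being told either quantity. For a point exactly on the flight path the bearing never changes, which is where the image-growth cue takes over.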

They then apply this method to the visual field as a whole to determine when collisions are likely and to create a control system that allows course adjustments to be made.

Their conclusion is that the optical flow approach leads to a fundamental limit on the agility of high speed flight. The factors that determine this are the size and density of the obstacles in the clutter field and a quantity that Sebesta and Baillieul call the steering authority, essentially the flier’s turning radius.

It turns out that there is a critical level of manoeuvrability. “it is shown that there are critical levels of steering authority, slightly below which it is almost impossible to transit an obstacle field and slightly above which it is almost certain the there will be a realizable collision-free path,” they say.

That’s a fascinating result. It places a fundamental limit on the ability of any flier to navigate an environment at speed. And it also allows the development of a relatively straightforward algorithm for achieving this limit, or something close to it, using image data feedback.

Indeed, Sebesta and Baillieul are already exploiting this in a custom-built UAV based on the popular quadcopter airframe, equipped with motion sensors, an onboard camera, and a Gumstix Fire Single Board Computer. “Our lab is currently conducting indoor free flight tests together with selected tethered outdoor flight tests,” they say.

That opens up the possibility of autonomous micro-air vehicles swooping and diving through cluttered environments like sparrowhawks through a forest. And doing it in the not too distant future.

Ref: arxiv.org/abs/1203.2816: Animal-Inspired Agile Flight Using Optical Flow Sensing
