Brain Scans Teach Humans to Empathize with Bots

When we watch a human express a powerful emotion - anger, fear, disgust - big sections of our brains light up, including so-called “mirror neurons,” which are unique because they fire both when we produce a given action and when we perceive it in others. They are the basis of what neuroscientists call Resonance.

Resonance describes the mechanism by which the neural substrates involved in the internal representation of actions, as well as emotions and sensations, are also recruited when perceiving another individual experiencing the same action, emotion or sensation.

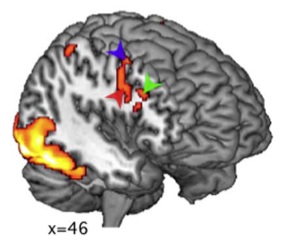

To test whether the same sections of the brain are activated when a human sees a robot expressing powerful emotions as when a human sees another human expressing them, an international group of researchers put volunteers into an fMRI machine - which can, with limited spatial and temporal resolution, determine which parts of the brain are active at any given time - and played them clips of humans and robots making identical facial expressions.

On a very basic level, the researchers were asking whether humans empathize with even obviously mechanical robots.

The results, published last week in the journal PLoS ONE, were about what you would expect: in a default scenario in which participants were told to concentrate on the motion of the facial gesture itself, their brains showed significantly reduced activation when they watched robots expressing emotion, as compared to humans doing the same thing.

But a funny thing happened when they were told to concentrate on the emotional content of the robots’ expressions: their brains showed significantly increased activity, including in the areas that contain mirror neurons.

So when we are asked to think about what a robot expressing an emotion might be feeling, we are instantly more likely to empathize with it. The very request - please concentrate on what the robot is feeling - presupposes that the robot has emotions at all.

Whether or not the robot is actually feeling something is therefore up to us - it depends on our beliefs about the sentience or non-sentience of the robot. It’s not hard to convincingly simulate at least an animal level of emotion in robots with even the most primitive gestural vocabulary - that’s the basis of the success of robotic therapy as carried out with, for example, the robotic baby seal Paro.

Below, I’ve embedded the very same video which participants were shown when they were in the fMRI scanner. The robot itself is barely recognizable as human, and its gestures even less so, which makes it all the more intriguing that participants were able to imagine, just for an instant, that it has feelings, too. What might an even more humanoid - or more familiar - robot accomplish?
