Android-Powered Sensors Monitor Vital Signs and More

In science fiction films from Aliens to Avatar, commanders back at base always know when soldiers of the future have been taken out by hostiles, because the soldiers' vital signs are monitored in real time. Doing that with present-day technology is a challenge, not least because collecting and transmitting all of the data that even a handful of motion and vital-signs sensors can gather would be a huge drain on battery power and wireless bandwidth.

By equipping users’ bodies and clothing with a mesh of sensors, known as “smart dust,” that report to an Android-powered phone, researchers are pioneering an open-source route to always-on medical monitoring. Their work has already allowed them to measure how much test subjects exercise, how well their hearts are working and how much air pollution they’re exposed to.

The resulting data have a number of applications:

- Incorporate historical and real-time vital-sign data into permanent medical records

- Automatically tell patients when to adjust their heart medication

- Turn exercise and daily activity levels into a Foursquare-style competition

- Help users avoid the places and times of day when air pollution is worst

The technology is described in a paper to be presented in late June at the 2010 International Conference on Pervasive Technologies Related to Assistive Environments (PETRA) in Samos, Greece. It outlines a hierarchy of processing steps that make 24/7 monitoring of vital signs such as breathing and heart rate realistic despite the battery-life and bandwidth constraints of mobile phones.

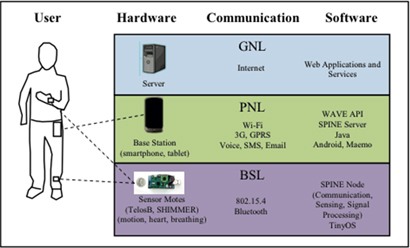

This hierarchy, known as DexterNet, processes data in sequence at each level of the hardware involved: the sensors themselves (the body sensor layer), the smartphone (the personal network layer) and finally the “cloud” (the global network layer), which backs up and performs the final processing of all of the user’s data. Processing inside each layer serves to reduce the amount of information that has to be transmitted wirelessly between devices.
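To make the data-reduction idea concrete, here is a minimal sketch of the three layers as plain Python functions. The function names, the use of a simple per-window mean at the body layer and the 100 Hz sampling rate are all assumptions for illustration; this is not the researchers’ DexterNet code or API.

```python
from statistics import mean

# Hypothetical sketch of DexterNet-style layering (function names are
# illustrative, not the researchers' API): each layer condenses its input
# before passing it upward, so fewer values cross each wireless link.

def body_sensor_layer(samples, window):
    """On the sensor node: reduce each window of raw samples to its mean."""
    return [mean(samples[i:i + window]) for i in range(0, len(samples), window)]

def personal_network_layer(per_sensor_summaries):
    """On the phone: merge per-sensor summaries into one record per window."""
    names = list(per_sensor_summaries)
    return [dict(zip(names, values))
            for values in zip(*per_sensor_summaries.values())]

def global_network_layer(records, history):
    """In the cloud: append the records to the user's long-term history."""
    history.extend(records)
    return history

# Ten seconds from two sensors at an assumed 100 Hz sampling rate.
raw = {"ecg": [0.5] * 1000, "accel": [1.0] * 1000}
summaries = {name: body_sensor_layer(s, window=100) for name, s in raw.items()}
records = personal_network_layer(summaries)
history = global_network_layer(records, [])

print(sum(len(s) for s in raw.values()),        # raw samples at the body layer
      sum(len(s) for s in summaries.values()),  # values sent over Bluetooth
      len(history))                             # records uploaded to the cloud
# prints: 2000 20 10
```

In this toy run, 2,000 raw samples shrink to 20 values on the sensor-to-phone link and 10 merged records on the phone-to-cloud link, which is the kind of saving in radio traffic the hierarchy is designed for.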

The lowest level of this hierarchy, the individual sensors on the user’s limbs and torso, can gather data on a number of parameters: motion in three axes (measured with a three-axis accelerometer and a two-axis gyroscope), heart activity (ECG), levels of airborne particulate matter and breathing movements (via “electrical impedance pneumography”).

To reduce how often these sensors must communicate with the user’s smartphone (and the volume of information they have to transmit), the sensors run basic signal-processing algorithms over a programmer-definable time window, computing values such as the minimum, maximum and mean of any given parameter.
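The in-node summarization described above can be sketched as a windowed feature extractor. This is a minimal illustration, assuming non-overlapping windows whose length in samples stands in for the programmer-definable period; the class and function names are hypothetical, and real sensor firmware (for example on TinyOS) would be written in a different language.

```python
from dataclasses import dataclass
from statistics import fmean

@dataclass
class WindowFeatures:
    minimum: float
    maximum: float
    mean: float

def extract_features(samples, window_size):
    """Summarize consecutive, non-overlapping windows of raw samples.

    window_size stands in for the programmer-definable period; a trailing
    partial window is dropped (a simplifying choice for this sketch).
    """
    if window_size <= 0:
        raise ValueError("window_size must be positive")
    features = []
    for start in range(0, len(samples) - window_size + 1, window_size):
        chunk = samples[start:start + window_size]
        features.append(WindowFeatures(min(chunk), max(chunk), fmean(chunk)))
    return features

# Hypothetical heart-rate samples (beats per minute), summarized every 4 samples.
readings = [72, 75, 71, 80, 78, 76, 90, 85]
for f in extract_features(readings, window_size=4):
    print(f.minimum, f.maximum, f.mean)
# prints:
# 71 80 74.5
# 76 90 82.25
```

Whatever the window length N, each window is reduced to three numbers, so lengthening the window directly trades time resolution for less radio traffic.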

Two types of sensors were used. One, the TelosB, is about the size of a USB thumb drive and sports a Texas Instruments processor of a kind often found in embedded applications, along with 10 KB of integrated RAM. The other, Intel’s SHIMMER sensor, runs TinyOS, an operating system designed specifically for remote sensors, weighs only 15 grams and is not much bigger than a quarter.

Led by Edmund Seto of the School of Public Health at UC Berkeley, the researchers were also able to combine data from the wireless sensors with data gathered by the phones themselves. By merging location, time of day and air-quality data, for example, they created maps of users’ days that highlight the places and times at which users were exposed to the highest levels of air pollution.
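One simple way to produce such an exposure summary is to tag each air-quality reading with the phone’s location fix closest in time, then average the readings per place. The sketch below assumes nearest-timestamp matching and labeled places; both are illustrative choices, not necessarily how the researchers built their maps, and the data are made up.

```python
from bisect import bisect_left
from collections import defaultdict
from statistics import fmean

def nearest_place(times, places, t):
    """Label of the location fix closest in time to t (times sorted ascending)."""
    i = bisect_left(times, t)
    candidates = [j for j in (i - 1, i) if 0 <= j < len(times)]
    best = min(candidates, key=lambda j: abs(times[j] - t))
    return places[best]

def exposure_by_place(fixes, pm_readings):
    """Average particulate readings per place, highest exposure first.

    fixes: non-empty list of (timestamp, place) pairs from the phone
    pm_readings: list of (timestamp, pm_value) pairs from the wearable sensor
    """
    fixes = sorted(fixes)
    times = [t for t, _ in fixes]
    places = [p for _, p in fixes]
    per_place = defaultdict(list)
    for t, pm in pm_readings:
        per_place[nearest_place(times, places, t)].append(pm)
    averages = {place: fmean(vals) for place, vals in per_place.items()}
    return sorted(averages.items(), key=lambda kv: kv[1], reverse=True)

# Hypothetical data: timestamps in minutes since midnight, PM values in µg/m³.
fixes = [(480, "home"), (540, "highway"), (600, "office")]
pm = [(485, 12.0), (542, 55.0), (550, 48.0), (630, 20.0)]
print(exposure_by_place(fixes, pm))
# prints: [('highway', 51.5), ('office', 20.0), ('home', 12.0)]
```

Swapping place labels for latitude/longitude pairs would turn the same join into the raw material for a map layer.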

Because phones and sensors communicate with each other wirelessly via Bluetooth, sensors can be placed not only on the user but also throughout his or her environment. In one application, the researchers added sensors to patients’ digital bathroom scales and blood pressure monitors to quantify the daily changes associated with excessive fluid retention. Algorithms running on the server to which the smartphone sends its data then used the results to suggest possible adjustments to the dosage of the patients’ blood pressure medication.
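As an illustration of the kind of server-side rule involved, the sketch below flags days on which a patient’s weight has risen sharply over a few days, a crude sign of fluid retention, and reports the blood pressure reading alongside it. The thresholds, field names and rule are hypothetical, chosen only to show the mechanics; they are neither clinical guidance nor the researchers’ algorithm.

```python
from dataclasses import dataclass

# HYPOTHETICAL thresholds chosen only for illustration: not clinical
# guidance and not taken from the researchers' paper.
WEIGHT_GAIN_KG = 2.0
WINDOW_DAYS = 3

@dataclass
class DailyReading:
    day: int             # day index
    weight_kg: float     # from the instrumented bathroom scale
    systolic_mmhg: int   # from the instrumented blood pressure monitor

def flag_possible_fluid_retention(readings):
    """Return (day, systolic) pairs for days on which weight rose by more than
    WEIGHT_GAIN_KG versus WINDOW_DAYS earlier, a crude proxy for fluid
    retention that a clinician could review before any dosage change."""
    by_day = {r.day: r for r in readings}
    flagged = []
    for r in sorted(readings, key=lambda reading: reading.day):
        earlier = by_day.get(r.day - WINDOW_DAYS)
        if earlier is not None and r.weight_kg - earlier.weight_kg > WEIGHT_GAIN_KG:
            flagged.append((r.day, r.systolic_mmhg))
    return flagged

week = [DailyReading(0, 80.0, 130), DailyReading(1, 80.2, 132),
        DailyReading(2, 80.5, 135), DailyReading(3, 82.4, 141),
        DailyReading(4, 82.9, 144)]
print(flag_possible_fluid_retention(week))
# prints: [(3, 141), (4, 144)]
```

The output is only a suggestion to review, matching the article’s description of the system proposing, rather than making, dosage changes.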

Seto et al. cited the Android platform as a key enabler of their work, not only because Android phones, like all smartphones, are fairly capable wearable computers in their own right, but also because Android is open source. That openness let the researchers develop on top of it using the SPINE platform for remote sensing and add their own API, known as WAVE (not to be confused with Google Wave). Together, these research platforms give them free rein to experiment.

So far, the researchers note, the only drawback to using the Android platform in this work is that it can’t locate users indoors. They spend part of their paper reinventing the wheel, speculating about ways to do this using Wi-Fi nodes and even visual recognition of interior spaces with the phone’s camera, apparently without realizing that Skyhook Wireless already offers an API and an international database of Wi-Fi networks that can accomplish exactly this.
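For readers curious how Wi-Fi nodes can locate a phone indoors at all, one of the simplest textbook approaches is a weighted centroid: average the known positions of the access points a scan can hear, weighting each by its signal strength. The sketch below is that generic technique under assumed inputs (a survey of access-point coordinates and per-scan RSSI values); it is not Skyhook’s API and not the method proposed in the paper.

```python
def weighted_centroid(observations, ap_positions):
    """Estimate an (x, y) position from one Wi-Fi scan.

    observations: {bssid: rssi_dbm} as reported by a scan
    ap_positions: {bssid: (x, y)} surveyed access-point coordinates, e.g.
                  metres on a floor plan (a hypothetical input for this sketch)
    Returns None if none of the observed access points has a known position.
    """
    total_w = x_acc = y_acc = 0.0
    for bssid, rssi_dbm in observations.items():
        if bssid not in ap_positions:
            continue  # no surveyed position for this access point
        w = 10 ** (rssi_dbm / 10.0)  # dBm -> milliwatts, so stronger signals weigh more
        x, y = ap_positions[bssid]
        total_w += w
        x_acc += w * x
        y_acc += w * y
    if total_w == 0.0:
        return None
    return (x_acc / total_w, y_acc / total_w)

aps = {"ap-lobby": (0.0, 0.0), "ap-lab": (10.0, 0.0)}
print(weighted_centroid({"ap-lobby": -45, "ap-lab": -70}, aps))  # lands close to the lobby AP
print(weighted_centroid({"unknown": -50}, aps))                  # prints: None
```

Weighting by linear power rather than raw dBm strongly favors the nearest access point; a production system would also need a large, maintained survey of access-point locations, which is precisely what a database like Skyhook’s provides.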

