Extending the Life of Quantum Bits

Quantum computing holds great promise as a way to factor huge numbers, potentially breaking ultra-secure cryptographic codes that traditional computers cannot crack. However, this promise has historically been tempered by practical concerns: quantum computers rely on particles and molecules that are extremely sensitive to their environment. As a result, any such system works for only milliseconds, and the more particles and ions are added to it, the more quickly its ability fades.

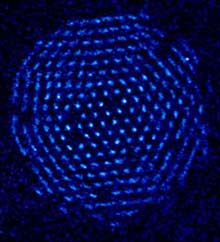

But now researchers at the National Institute of Standards and Technology (NIST) have demonstrated, for the first time, that the lifetime of quantum-computing bits, known as qubits, can be extended using simple operations. In their experiment, they showed that by applying specially timed magnetic pulses to qubits made of beryllium ions, they could prolong the life of the quantum bits from about one millisecond to hundreds of milliseconds. The work is described in this week’s Nature.

“The worst thing about quantum information from an experimental perspective,” says Michael Biercuk, a researcher at NIST, “is that even if you do nothing to your qubit, just its interaction with the environment does something to it.” Qubits, he explains, are dependent on the quantum magic of superposition, in which certain properties of a quantum system exist in two or more distinct states at once. Superposition is a fleeting thing, and it quickly starts to break down, or decohere, due to noise such as random electrical fluctuations in the environment, says Biercuk. But what Biercuk and his colleagues John Bollinger and Herman Uys have done is “mitigate the effects of decoherence.”

This means that the researchers have bought some time to do more complex experiments, such as modeling quantum states of large molecules, says Biercuk. It also means that they could add more qubits to the system, essentially providing more computational horsepower, and still have enough time to perform some experiments. Additionally, notes Biercuk, the team showed that it’s possible to use the approach for different types of quantum-computing systems, such as those that are built in a semiconducting material like silicon. In other words, the researchers have provided a general solution to a problem that plagues all researchers who work on quantum computers.

The average home computer is considered a classical computer, and it works in a straightforward way: information is processed in discrete chunks called bits, each represented, in computer language, as either a 1 or a 0. In contrast, quantum computers process information by using qubits, which can register a 1 and a 0 at the same time. This subtle difference can give quantum computers exponentially more power than classical computers for certain problems.
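One rough way to see where the "exponentially more" comes from is to count how many numbers a classical program needs to describe a group of qubits. The sketch below is only an illustration, not anything from the NIST work: the function name `uniform_superposition` is hypothetical, and it assumes the simplest case, in which every possible bit pattern is equally likely.

```python
import math


def uniform_superposition(n_qubits):
    """Return the amplitudes of n qubits in an equal superposition.

    Hypothetical helper for illustration: a classical description of
    n qubits needs one amplitude per bit pattern, i.e. 2**n numbers.
    """
    dim = 2 ** n_qubits
    amplitude = 1 / math.sqrt(dim)  # equal weight on every bit pattern
    return [amplitude] * dim


if __name__ == "__main__":
    for n in (1, 2, 10, 20):
        state = uniform_superposition(n)
        # Probabilities are squared amplitudes and must add up to 1.
        total_probability = sum(a * a for a in state)
        print(n, "qubits ->", len(state), "amplitudes; total probability",
              round(total_probability, 6))
```

Each added qubit doubles the length of the list, which is why even a few dozen qubits are hard to track on a classical machine.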

Small-scale quantum computers–those that deal with a handful of qubits–have existed for a number of years. In the 1990s, says Sankar Das Sarma, a professor of physics at the University of Maryland, researchers proposed that the error caused by decoherence could be reversed after the fact using software, but that is still just a theory. Researchers tried to reduce the decoherence error by shielding their systems as best they could from environmental fluctuations. Eventually, researchers proposed theories in which error from decoherence could be lessened in the hardware of a quantum computer itself. However, Das Sarma says, it was thought to be too tricky to implement experimentally.

Biercuk explains that he and his colleagues borrowed some ideas for their research from the nuclear magnetic resonance research community, which has been around for decades but whose ideas were never applied specifically to quantum-computational systems. To implement the technique, the researchers first measure the characteristic environmental noise; knowing that, they can apply a series of magnetic pulses to their qubits at precise intervals to snap them back into a state of superposition. They also created different noise conditions that are common in other quantum-computing systems, such as those made in silicon, and modified the timing of the pulses accordingly, to prove that the pulses work in those instances as well.
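The idea of using timed pulses to undo the effect of noise can be illustrated with a toy simulation. The sketch below is not the NIST team's method or pulse timing: it assumes a made-up noise model (a random constant offset plus one slow oscillation), treats each pulse as a perfect, instantaneous flip, and spaces the pulses evenly, which is just one simple choice. The function name `coherence` and all of its parameters are hypothetical.

```python
import cmath
import math
import random


def coherence(n_pulses, total_time=1.0, n_steps=400, n_runs=200, seed=0):
    """Estimate how much superposition survives, from 0 (lost) to 1 (intact).

    Toy model (assumption): noise shifts the qubit's phase over time, and
    each ideal pulse reverses the direction in which the phase accumulates.
    """
    rng = random.Random(seed)
    dt = total_time / n_steps
    # Evenly spaced pulses, centered in equal slices of the total time.
    pulse_times = [(k + 0.5) * total_time / n_pulses for k in range(n_pulses)]
    total = 0j
    for _ in range(n_runs):
        offset = rng.gauss(0.0, 5.0)            # constant noise for this run
        drift_amp = rng.gauss(0.0, 5.0)         # slow oscillating noise
        drift_phase = rng.uniform(0.0, 2 * math.pi)
        phi, sign, next_pulse = 0.0, 1.0, 0
        for step in range(n_steps):
            t = (step + 0.5) * dt
            # Apply any pulses that are due before this time step.
            while next_pulse < n_pulses and pulse_times[next_pulse] <= t:
                sign = -sign
                next_pulse += 1
            noise = offset + drift_amp * math.cos(math.pi * t + drift_phase)
            phi += sign * noise * dt
        total += cmath.exp(1j * phi)
    # Averaging over many noisy runs washes out the phase unless it refocuses.
    return abs(total) / n_runs


if __name__ == "__main__":
    for n in (0, 1, 8):
        print(n, "pulses -> coherence", round(coherence(n), 3))
```

In this toy model, the run with no pulses loses nearly all coherence, while the run with eight pulses keeps most of it, because the phase picked up before each flip is largely cancelled after it. Matching the pulse timing to a measured noise profile, as the researchers describe, goes beyond what this sketch attempts.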

“It’s a nice technique,” says Seth Lloyd, a professor of mechanical engineering at MIT. “They took some well-known techniques from nuclear magnetic resonance, juiced them up, and turbocharged them.” In the near term, says Lloyd, the technique could be used for improving the accuracy of atomic clocks. “In the long term,” he says, “you might be able to use this to make a better quantum computer.”
