10 Breakthrough Technologies 2012

Emerging Technologies: 2012

These are the 10 most important technological milestones reached over the previous 12 months. To compile the list, the editors of MIT Technology Review select the technologies we believe will have the greatest impact on the shape of innovation in years to come. This impact can take very different forms, but in all cases, these are breakthroughs with the potential to transform the world.

10 Breakthrough Technologies

Solar Microgrids

Village-scale DC grids provide power for lighting and cell phones.

Rural Indians are replacing kerosene lamps with cheaper and cleaner LEDs.

Nearly 400 million Indians, mostly those living in rural communities, lack access to grid power. For many of them, simply charging a cell phone requires a long trip to a town with a recharging kiosk, and their homes are dimly lit by sooty kerosene-fueled lamps.

To change that, Nikhil Jaisinghani and Brian Shaad cofounded Mera Gao Power. Taking advantage of the falling cost of solar panels and LEDs, the company aims to build and operate low-cost solar-powered microgrids that can provide clean light and charge phones. Microgrids distribute electricity in a limited area from a relatively small generation point. While alternative solutions, such as individual solar-powered lanterns, can also provide light and charge phones, the advantage of a microgrid is that the installation cost can be spread across a village. The system can also use more efficient, larger-scale generation and storage systems, lowering operational costs.

Mera Gao’s first commercial microgrid was deployed last summer, and eight more villages have been added since; there are plans to expand to another 40 villages this year with the help of a $300,000 grant from the U.S. Agency for International Development. The company has also encouraged others to enter the Indian market for off-grid renewable energy, which the World Resources Institute, a think tank based in Washington, DC, estimates at $2 billion per year.

A typical installation uses two banks of solar panels, located on different rooftops.

For a cost of $2,500, a hundred households, in groups of up to 15, can be wired up to two generation hubs, each consisting of a set of solar panels and a battery pack. The grid uses 24-volt DC power throughout, which permits the use of aluminum wiring rather than the more expensive copper wiring required for higher-voltage AC distribution systems. The village is carefully mapped before installation to ensure the most efficient arrangement of distribution lines. (Circuit breakers will trip if a freeloader tries to tap in.) “This mapping and design is our biggest innovation,” Jaisinghani says.

Each household gets 0.2 amps for seven hours a night—enough to power two LED lights and a mobile-phone charging point—for a prepaid monthly fee of 100 rupees ($2); kerosene and phone charging generally cost 100 to 150 rupees a month.
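The household figures above can be checked with a few lines of arithmetic. This back-of-the-envelope sketch assumes the 0.2-amp allotment is delivered at the grid's 24-volt distribution voltage (the text does not say where it is measured) and ignores wiring and battery losses; the constant and function names are illustrative.

```python
# Back-of-the-envelope numbers for one household on a Mera Gao-style microgrid.
# Assumption: the 0.2 A allotment is drawn at the 24 V distribution voltage,
# and wiring/battery losses are ignored.
GRID_VOLTS = 24
HOUSEHOLD_AMPS = 0.2
HOURS_PER_NIGHT = 7
INSTALL_COST_USD = 2500
HOUSEHOLDS = 100
FEE_RUPEES = 100                     # prepaid monthly fee
KEROSENE_AND_CHARGING = (100, 150)   # typical monthly spend on the old options, rupees


def household_figures():
    watts = GRID_VOLTS * HOUSEHOLD_AMPS          # P = V * I
    wh_per_night = watts * HOURS_PER_NIGHT       # E = P * t
    capex_per_home = INSTALL_COST_USD / HOUSEHOLDS
    monthly_saving = tuple(old - FEE_RUPEES for old in KEROSENE_AND_CHARGING)
    return watts, wh_per_night, capex_per_home, monthly_saving


watts, wh, capex, saving = household_figures()
print(f"{watts:.1f} W, {wh:.1f} Wh per night, ${capex:.0f} per home, "
      f"saves {saving} rupees/month")
```

With the article's numbers this works out to about 4.8 watts and 33.6 watt-hours per household per night, roughly $25 of installation cost per home, and a saving of 0 to 50 rupees a month over kerosene and paid charging.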

Jaisinghani says Mera Gao’s microgrid is not a replacement for grid power, but it’s what people want and can pay for right now. Currently the technology supports only lighting and phone charging, but the company is exploring ideas such as community entertainment centers where the costs of television, radio, cooling fans, and information services are spread across a group of homes rather than being paid by a single user.

Light-Field Photography

Lytro reinvented the camera so that it can evolve faster.

This March brought the first major update to camera design since the dawn of cheap digital photography: a camera that lets you adjust the focus of an image after you’ve taken the picture. It is being sold for $399 and up by Lytro, a startup based in Silicon Valley that plans to use its technology to offer much more than the refocusing trick, such as making 3-D images at home.

All consumer cameras create images using a flat plate—whether chemical film or a digital sensor—to record the position, color, and intensity of the light that comes through a lens. Lytro’s camera does all that, but it also records the angle at which rays of light arrive (see graphic). The resulting files aren’t images but mini-databases capturing the three-dimensional pattern of light, called a light field, at a particular moment. Software can mine that database to produce many different possible photos and visual effects from one press of the shutter.

Lytro has wrapped its technology in a consumer-friendly package, making this new form of photography more likely to catch on.

Light-field cameras existed before, but they had limited industrial uses and were never cheap enough for consumers. Lytro founder Ren Ng, who worked on light-field technology for his PhD at Stanford University, made this one affordable by simplifying the design. Instead of using multiple lenses, which made previous light-field cameras expensive and delicate, Ng showed that laying a low-cost plastic film patterned with tiny microlenses on top of a regular digital sensor could enable it to detect the direction of incoming light.

Recording the entire light field entering the camera means that images can be focused after the fact: a user can choose near, far, or any focus in between.

Refocusing images after they are shot is just the beginning of what Lytro’s cameras will be able to do. A downloadable software update will soon enable them to capture everything in a photo in sharp focus regardless of its distance from the lens, which is practically impossible with a conventional camera. Another update scheduled for this year will use the data in a Lytro snapshot to create a 3-D image. Ng is also exploring a video camera that could be focused after shots were taken, potentially giving home movies a much-needed boost in production values.
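Refocusing after the fact can be illustrated with the shift-and-add method often described for light-field data. This is a simplified sketch, not Lytro's software: it assumes the raw sensor data has already been rearranged into a grid of sub-aperture images (one small view per position across the lens aperture), uses whole-pixel shifts, and clamps at the image edges. The `shift` parameter picks which depth ends up sharp.

```python
from typing import List

Image = List[List[float]]


def refocus(light_field: List[List[Image]], shift: int) -> Image:
    """Shift-and-add refocusing of a 4-D light field.

    light_field[u][v] is the sub-aperture image seen from aperture position (u, v).
    Each view is translated in proportion to its offset from the aperture centre,
    then all views are averaged; objects whose parallax matches `shift` come out sharp.
    """
    nu, nv = len(light_field), len(light_field[0])
    h, w = len(light_field[0][0]), len(light_field[0][0][0])
    cu, cv = (nu - 1) // 2, (nv - 1) // 2
    out = [[0.0] * w for _ in range(h)]
    for u in range(nu):
        for v in range(nv):
            dy, dx = shift * (u - cu), shift * (v - cv)
            view = light_field[u][v]
            for y in range(h):
                for x in range(w):
                    # Clamp to the image border instead of wrapping around.
                    sy = min(max(y + dy, 0), h - 1)
                    sx = min(max(x + dx, 0), w - 1)
                    out[y][x] += view[sy][sx]
    n = nu * nv
    return [[val / n for val in row] for row in out]


# Toy example: one row of pixels seen from three aperture positions.
# A point appears one pixel further right in each successive view.
def point_view(pos: int, width: int = 5) -> Image:
    return [[1.0 if x == pos else 0.0 for x in range(width)]]


toy_field = [[point_view(1), point_view(2), point_view(3)]]
print(refocus(toy_field, 1)[0])  # views aligned on the point: sharp
print(refocus(toy_field, 0)[0])  # views not aligned: point smeared over three pixels
```

Choosing a different `shift` after capture is the software equivalent of turning the focus ring, which is why a single exposure can yield many differently focused photos.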

Images from Lytro cameras can be shared on websites and Facebook in a way that allows other people to experiment with changing the focus to explore what the photographer captured. This kind of flexibility is so appealing, Ng says, that “in the future, all cameras will be light-field-based.”

Egg Stem Cells

A recent discovery could increase older women’s chances of having babies.

Jonathan Tilly may have discovered a way to slow the ticking of women’s biological clocks. In a paper published in March, the Harvard University reproductive biologist and his colleagues reported that women carry egg stem cells in their ovaries into adulthood, a possible key to extending the age at which a woman might have a baby. Today, a woman’s fertility is limited by her total supply of eggs and by the diminished quality of those eggs as she reaches her 40s. Tilly’s work with the stem cells (cells that can differentiate, or develop into other kinds of cells) could address both issues. For one thing, it’s possible that these newly discovered cells could be coaxed to develop into new eggs. And even if not, he says, they could be used to rejuvenate an older woman’s existing eggs.

Tilly first found egg stem cells in mice in 2004. Once he identified egg stem cells in ovarian tissue from adult women, he isolated the cells and injected them into human ovary tissue that was then transplanted into mice. There the cells differentiated into human oocytes, the immature egg cells that mature, one at a time, at ovulation. Tilly didn’t take these oocytes any further, but he says he has gotten egg stem cells from mice to generate functional mouse eggs that were fertilized and exhibited early embryonic development.

The research is still a long way from creating a crying human newborn. Nevertheless, the paper “changes what we understand” about fertility, says Tilly, who also directs a center for reproductive biology at Massachusetts General Hospital. Though some of Tilly’s peers remain dubious that the cells he’s found in women’s ovarian tissue are actually stem cells or could become functional egg cells, many find the research provocative. “I think this is a very intriguing leap,” says Elizabeth McGee, an associate professor and head of reproductive endocrinology and infertility at Virginia Commonwealth University. “However, I think there’s still a long way to go before this becomes a useful product for women.”

Boston-based OvaScience, which is commercializing Tilly’s work, hopes it won’t be too long. The company’s cofounders include venture capitalist Christoph Westphal and Harvard antiaging researcher David Sinclair, who founded Sirtris Pharmaceuticals and sold it to GlaxoSmithKline for $720 million in 2008. OvaScience has raised $43 million to pursue fertility treatments and other applications for the stem cells.

One of the more tantalizing implications is that this technology could be used to reclaim the youth of an older woman’s eggs. Tilly says he can do this by transferring mitochondria—the cell’s power source—from the stem-cell-derived cells into the existing eggs. Researchers who tried something similar in the 1990s, with the help of young donors, found that mitochondria from the donors’ egg cells could improve the viability of older eggs. But the nearly 30 children who resulted from this work ended up with DNA from two women as well as their father. (It’s not clear whether the children suffered any health consequences.) By being her own source for the younger mitochondria, a woman could avoid that potentially dangerous mix of DNA, Tilly says.

David Albertini, director of the Center for Reproductive Sciences at the University of Kansas Medical Center and a member of OvaScience’s advisory board, says he “can’t wait to get [his] hands on” Tilly’s cells for his own egg research. But he says it’s too soon to consider implanting them in women before much more testing is done in mice.

Ultra-Efficient Solar

Under the right circumstances, solar cells from Semprius could produce power more cheaply than fossil fuels.

Semprius’s solar panels use glass lenses to concentrate incoming light, maximizing the power production of tiny photovoltaic cells.

This past winter, a startup called Semprius set an important record for solar energy: it showed that its solar panels can convert nearly 34 percent of the light that hits them into electricity. Semprius says its technology, once scaled up, is so efficient that in some places, it could soon make electricity cheaply enough to compete with power plants fueled by coal and natural gas.

Because solar installations have many fixed costs, including real estate for the arrays of panels, it is important to maximize the efficiency of each panel in order to bring down the price of solar energy. Companies are trying a variety of ways to do that, including using materials other than silicon, the most common semiconductor in solar panels today.

For example, a startup called Alta Devices (see the TR50, March/April 2012) makes flexible sheets of solar cells out of a highly efficient material called gallium arsenide. Semprius also uses gallium arsenide, which is better than silicon at turning light into electricity (the record efficiency measured in a silicon solar panel is about 23 percent). But gallium arsenide is also far more expensive, so Semprius is trying to make up for the cost in several ways.

A new mass-production process makes high-efficiency gallium arsenide a more cost-effective photovoltaic material.

One is to shrink its solar cells, the individual light absorbers in a solar panel, to just 600 micrometers wide, 600 micrometers long, and 10 micrometers thick. Its manufacturing process is built on research by cofounder John Rogers, a professor of chemistry and engineering at the University of Illinois, who figured out a way to grow the small cells on a gallium arsenide wafer, lift them off quickly, and then reuse the wafer to make more cells. Once the cells are laid down, Semprius maximizes their power production by putting them under glass lenses that concentrate sunlight about 1,100 times.
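The cell dimensions and concentration factor above imply how little gallium arsenide each cell needs. This rough sketch assumes the 1,100-times figure can be treated as a purely geometric ratio (lens area divided by cell area) and ignores optical losses.

```python
# Rough geometry of one concentrator cell, from the dimensions in the text.
# Assumption: "concentrate sunlight about 1,100 times" is treated as a purely
# geometric ratio of lens area to cell area (optical losses ignored).
CELL_SIDE_MM = 0.6        # 600 micrometers
CELL_THICKNESS_MM = 0.01  # 10 micrometers
CONCENTRATION = 1100


def cell_geometry():
    cell_area = CELL_SIDE_MM ** 2                  # mm^2 of gallium arsenide
    lens_area = cell_area * CONCENTRATION          # mm^2 of sunlight gathered
    lens_side = lens_area ** 0.5                   # side of an equivalent square lens
    gaas_volume = cell_area * CELL_THICKNESS_MM    # mm^3 of semiconductor
    gaas_fraction = cell_area / lens_area          # share of the aperture that is GaAs
    return cell_area, lens_area, lens_side, gaas_volume, gaas_fraction


area, lens, side, volume, fraction = cell_geometry()
print(f"cell {area:.2f} mm^2, lens {lens:.0f} mm^2 (~{side:.0f} mm square), "
      f"GaAs {volume:.4f} mm^3, {fraction:.2%} of the aperture")
```

Under these assumptions each 0.36-square-millimeter cell sits under a lens of roughly 2 by 2 centimeters, so gallium arsenide covers less than a tenth of a percent of the light-collecting area.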

Concentrating sunlight on solar panels is not new, but with larger silicon cells, a cooling system typically must be used to conduct away the heat that this generates. Semprius’s small cells produce so little heat that they don’t require cooling, which further brings down the cost. Scott Burroughs, Semprius’s vice president of technology, says utilities that use its system should be able to produce electricity at around eight cents per kilowatt-hour in a few years. That’s less than the U.S. average retail price for electricity, which was about 10 cents per kilowatt-hour in 2011, according to the U.S. Energy Information Administration.

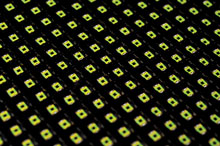

The gallium arsenide is the black square on each cell. Using such small amounts of the expensive material keeps costs down.

Semprius’s advantages are tempered by the limitations of using lenses to concentrate light: the system works best when the cells receive direct sunlight under a cloudless sky, and energy production drops significantly under any other conditions. Even so, it could be suitable for large, utility-scale projects in places such as the American Southwest.

First, however, Semprius has to begin mass-producing its panels. The company, which has raised about $44 million from venture capital firms and Siemens (which builds solar power plants), plans this year to open a small factory in North Carolina that can produce six megawatts’ worth of solar panels a year. The company hopes to expand that to 30 megawatts by the end of 2013, but to do so it must raise an undisclosed amount of money in an atmosphere that is no longer kind to capital-intensive energy startups.

All the while, Semprius will also have to reduce its manufacturing costs fast enough to compete with conventional silicon panels, whose prices fell by more than half in 2011 alone.

3-D Transistors

Intel creates faster and more energy-efficient processors.

The new transistors (above) have vertical current-carrying channels. In older designs (inset), the channels lie flat under the gates.

In an effort to keep squeezing more components onto silicon chips, Intel has begun mass-producing processors based on 3-D transistors. The move not only extends the life of Moore’s Law (the prediction that the number of transistors per chip will double roughly every two years) but could help significantly increase the energy efficiency and speed of processors.

The on-and-off flow of current in conventional chips is controlled by an electric field generated by a so-called gate that sits on top of a wide, shallow conducting channel embedded in a silicon substrate. With the 3-D transistors, that current-carrying channel has been flipped upright, rising off the surface of the chip. The channel material can thus be in contact with the gate on both its sides and its top, leaving little of the channel exposed to interference from stray charges in the substrate below. In earlier transistors, these charges interfered with the gate’s ability to block current, resulting in a constant flow of leakage current.

With virtually no leakage current, a transistor can switch on and off more cleanly and quickly, and it can be run at lower power, since designers don’t have to worry that leakage current could be mistaken for an “on” signal.

Intel claims the new transistors can switch up to 37 percent faster than its previous transistors or consume as little as half as much power. Faster switching means faster chips. In addition, because of their smaller footprint, the transistors can be packed closer together. Signals thus take less time to travel between them, further speeding up the chip.

The first processors based on the technology will shortly appear in laptops. But the electronics industry is especially excited by the prospect of conserving power in handheld devices. That means designers can upgrade the performance of a device without requiring bulkier batteries, or reduce battery size without lowering performance. “Ten years ago everyone only cared about making chips faster,” says Mark Bohr, who heads process technology at Intel. “Today low-power operation is much more important.” He adds that the power savings and performance gains will be magnified in handheld devices because the smaller transistors will make it possible for a single chip to handle functions such as memory, broadband communications, and GPS, each of which used to require its own chip. With fewer chips and smaller batteries, gadgets will be able to do more in tinier packages.

The new transistor design leaves room for enough further improvement to see the industry through the next five years. Intel’s previous chips could pack in 4.87 million transistors per square millimeter; the new chips have 8.75 million, and by 2017, about 30 million transistors per square millimeter should be possible. “This buys silicon another few generations,” says Bohr.
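The density figures above can be turned into growth rates. This small sketch assumes the new chips count as 2012 parts, so "by 2017" is five years out, and that density grows exponentially between the quoted points.

```python
import math

# Transistor densities quoted in the text (transistors per square millimeter).
PREVIOUS = 4.87e6
CURRENT = 8.75e6
PROJECTED_2017 = 30e6
YEARS_TO_2017 = 5  # assumption: the new chips are counted as 2012 parts


def implied_doubling_time(start: float, end: float, years: float) -> float:
    """Years per doubling if density grows exponentially from start to end."""
    return years / math.log2(end / start)


step_gain = CURRENT / PREVIOUS
moores_law_2017 = CURRENT * 2 ** (YEARS_TO_2017 / 2)  # strict two-year doubling
print(f"generation gain: {step_gain:.2f}x")
print(f"doubling time implied by the 2017 projection: "
      f"{implied_doubling_time(CURRENT, PROJECTED_2017, YEARS_TO_2017):.1f} years")
print(f"strict two-year doubling would give {moores_law_2017 / 1e6:.0f} million per mm^2")
```

Read literally, the move to 3-D transistors is a gain of about 1.8 times in density, and the 2017 projection corresponds to a doubling roughly every 2.8 years.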

Crowdfunding

Kickstarter is funding the commercialization of new technologies.

Entrepreneurs can post videos and pictures on Kickstarter to attract pledges for projects. Some success stories (clockwise from top left): Elevation iPhone dock, $1,460,000; Double Fine Adventure (video game), $3,330,000; Twine Wi-Fi sensors, $557,000; CloudFTP wireless thumb drive hub, $262,000; PrintrBot 3-D printer, $831,000; The Order of the Stick (comic book), $1,250,000.

Kickstarter, a New York City–based website originally founded to support creative projects, has become a force in financing technology startups. Entrepreneurs have used the site to raise hundreds of thousands of dollars at a time to develop and produce products, including a networked home sensing system and a kit that prints three-dimensional objects (see Hack).

This crowdfunding model offers an alternative to traditional means of raising startup funds for some types of businesses, such as Web or design firms. Startups keep their equity, maintain full control over strategy, and gain a committed community of early adopters to boot.

While most projects ask for relatively small amounts, several have exceeded the $1 million mark. Most notably, Double Fine Productions raised over $3 million to develop a video game. That’s well beyond the typical angel stake, which generally tops out at $600,000, and into the realm of the typical venture capital infusion.

Overall, Kickstarter users pledged about $99.3 million for projects last year, an amount roughly equivalent to 10 percent of all seed investment in the United States, which PricewaterhouseCoopers estimates at $920 million.

People seeking to raise money for a project set a funding target and solicit pledges. If a project fails to reach its target (as happened to about 54 percent of them in 2011), supporters pay nothing. For projects that do hit their target, donors receive a variety of rewards, including thank-you notes, products, or even elaborate packages that might include a visit to the creators’ place of work. Kickstarter, which was launched in 2009 by Yancey Strickler, Charles Adler, and Perry Chen, takes a 5 percent cut. Since the launch, the site has distributed over $150 million.
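The all-or-nothing rule is simple enough to state as code. This minimal sketch models only the 5 percent platform cut mentioned above; payment-processing fees are not modeled, and the backer names and amounts are made up.

```python
from dataclasses import dataclass
from typing import Dict

PLATFORM_CUT = 0.05  # Kickstarter's 5 percent cut, as stated in the text


@dataclass
class Settlement:
    funded: bool
    charged: Dict[str, float]  # what each backer actually pays
    platform_fee: float
    to_creators: float


def settle(target: float, pledges: Dict[str, float]) -> Settlement:
    """All-or-nothing settlement: backers are charged only if pledges reach the target."""
    total = sum(pledges.values())
    if total < target:
        # Missed target: nobody pays and nothing is collected.
        return Settlement(False, {backer: 0.0 for backer in pledges}, 0.0, 0.0)
    fee = total * PLATFORM_CUT
    return Settlement(True, dict(pledges), fee, total - fee)


print(settle(1000, {"ana": 600, "bo": 500}))  # target met: 1,100 pledged
print(settle(1000, {"ana": 300}))             # target missed: nobody is charged
```

Because failed projects cost backers nothing, the risk of pledging falls mostly on whether a funded project delivers, not on whether it reaches its goal.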

Kickstarter’s role could begin to shift with the end of the U.S. ban on private companies’ selling equity to small investors, which was lifted in April. Says Paul Kedrosky, a senior fellow at the Kauffman Foundation who focuses on risk capital: “If crowdfunding sites start offering equity shares, it will make a few dozen VC firms disappear.”

A Faster Fourier Transform

A mathematical upgrade promises a speedier digital world.

Piotr Indyk, Dina Katabi, Eric Price, and Haitham Hassanieh (left to right) have created a faster way to break down complex signals into combinations of simple waves for processing.

In January, four MIT researchers showed off a replacement for one of the most important algorithms in computer science. Dina Katabi, Haitham Hassanieh, Piotr Indyk, and Eric Price have created a faster way to perform the Fourier transform, a mathematical technique for processing streams of data that underlies the operation of things such as digital medical imaging, Wi-Fi routers, and 4G cellular networks.

The principle of the Fourier transform, which dates back to the 19th century, is that any signal, such as a sound recording, can be represented as the sum of a collection of sine and cosine waves with different frequencies and amplitudes. This collection of waves can then be manipulated with relative ease—for example, allowing a recording to be compressed or noise to be suppressed. In the mid-1960s, a computer-friendly algorithm called the fast Fourier transform (FFT) was developed. Anyone who’s marveled at the tiny size of an MP3 file compared with the same recording in an uncompressed form has seen the power of the FFT at work.
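The fast Fourier transform mentioned above is most often presented as the Cooley-Tukey algorithm. Below is a textbook radix-2 version (input length must be a power of two) next to a direct evaluation of the discrete Fourier transform: the direct form needs about n² multiply-adds, while the recursion splits the samples into even and odd halves and needs on the order of n log n. It is an illustrative sketch, not production code.

```python
import cmath
import math
from typing import List, Sequence


def dft(x: Sequence[complex]) -> List[complex]:
    """Direct discrete Fourier transform: O(n^2) operations."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def fft(x: Sequence[complex]) -> List[complex]:
    """Recursive radix-2 Cooley-Tukey FFT: O(n log n) operations."""
    n = len(x)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])  # transform of even-indexed samples
    odd = fft(x[1::2])   # transform of odd-indexed samples
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


# A 16-sample cosine at frequency 3: its energy shows up only in bins 3 and 13.
signal = [math.cos(2 * math.pi * 3 * t / 16) for t in range(16)]
spectrum = fft(signal)
print([round(abs(c), 6) for c in spectrum])
```

The cosine example shows the "sum of waves" idea directly: one input wave produces a spectrum that is zero except for a matching pair of frequency bins.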

With the new algorithm, called the sparse Fourier transform (SFT), streams of data can be processed 10 to 100 times faster than was possible with the FFT. The speedup can occur because the information we care about most has a great deal of structure: music is not random noise. In such signals, only a small fraction of the possible frequency components are significant; the technical term for this is that the information is “sparse.” Because the SFT algorithm isn’t intended to work with all possible streams of data, it can take certain shortcuts not otherwise available. In theory, an algorithm that can handle only sparse signals is much more limited than the FFT. But “sparsity is everywhere,” points out coinventor Katabi, a professor of electrical engineering and computer science. “It’s in nature; it’s in video signals; it’s in audio signals.”
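A toy case shows why sparsity permits shortcuts. If a signal of length n is known to contain exactly one frequency, two samples are enough to find it: the ratio of consecutive samples is a pure rotation whose angle encodes the frequency. This illustrates the principle only; it is not the MIT team's algorithm, which has to cope with several significant frequencies and with noise, and the function name is hypothetical.

```python
import cmath
from typing import Callable, Tuple


def recover_one_sparse(sample: Callable[[int], complex], n: int) -> Tuple[int, complex]:
    """Recover (f, a) for x[t] = a * exp(2j*pi*f*t/n), assuming exactly one frequency is present.

    Reads only x[0] and x[1], instead of all n samples an FFT would need.
    """
    x0, x1 = sample(0), sample(1)
    angle = cmath.phase(x1 / x0)               # 2*pi*f/n, wrapped into (-pi, pi]
    f = round(angle * n / (2 * cmath.pi)) % n  # undo the wrap-around
    return f, x0


n = 1 << 20  # about a million samples, never materialized


def hidden(t: int) -> complex:
    return (2 - 1j) * cmath.exp(2j * cmath.pi * 777_777 * t / n)


print(recover_one_sparse(hidden, n))
```

The work here does not grow with n at all, whereas an FFT must touch every sample; that gap is the kind of saving that knowing a signal is sparse makes available.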

A faster transform means that less computer power is required to process a given amount of information—a boon to energy-conscious mobile multimedia devices such as smart phones. Or with the same amount of power, engineers can contemplate doing things that the computing demands of the original FFT made impractical. For example, Internet backbones and routers today can actually read or process only a tiny trickle of the river of bits they pass between them. The SFT could allow researchers to study the flow of this traffic in much greater detail as bits shoot by billions of times a second.

Nanopore Sequencing

Simple and direct analysis of DNA will make genetic testing routine in more situations.

Oxford Nanopore has demonstrated commercial machines that read DNA bases directly. The technology offers a way to make genome sequencing faster, cheaper, and potentially convenient enough to let doctors use sequencing as routinely as they order an MRI or a blood cell count, ushering in an era of personalized medicine.

The company’s devices, which eliminate the need to amplify DNA or use expensive reagents, work by passing a single strand of DNA through a protein pore created in a membrane. An electric current flows through the pore; different DNA bases disrupt the current in different ways, letting the machine electronically read out the sequence.
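The read-out principle can be sketched as a toy base caller: split the measured current into steps, then label each step with the base whose characteristic level it most resembles. Everything here is hypothetical, including the current levels. Real nanopore signals depend on several bases occupying the pore at once, and runs of the same base (which this sketch would merge into a single step) need extra handling.

```python
from typing import Dict, List

# Hypothetical mean current (picoamps) for each base; not real Oxford Nanopore values.
LEVELS_PA: Dict[str, float] = {"A": 60.0, "C": 50.0, "G": 40.0, "T": 30.0}


def segment(trace: List[float], jump: float = 5.0) -> List[List[float]]:
    """Split a current trace into events wherever the signal jumps by more than `jump` pA."""
    events: List[List[float]] = []
    for sample in trace:
        if events and abs(sample - events[-1][-1]) <= jump:
            events[-1].append(sample)
        else:
            events.append([sample])
    return events


def call_bases(trace: List[float]) -> str:
    """Label each event with the base whose reference level is closest to its mean current."""
    bases = []
    for event in segment(trace):
        mean = sum(event) / len(event)
        bases.append(min(LEVELS_PA, key=lambda b: abs(LEVELS_PA[b] - mean)))
    return "".join(bases)


print(call_bases([60.5, 59.8, 61.0, 39.7, 40.2, 30.1, 29.5, 50.3]))
```

Because the reading is purely electrical, no fluorescent labels or amplification steps appear anywhere in this pipeline, which mirrors the simplicity the text describes.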

Rival sequencing technologies have gotten faster and cheaper in recent years as well. But most of them either use fluorescent reagents to identify bases or require chopping up the DNA molecule and amplifying the fragments. Oxford Nanopore’s technology is simpler, and it avoids errors that can creep in during these steps.

Being able to read DNA molecules directly also means that longer segments of a genome can be read at a time. This makes it easier for researchers to see large-scale patterns such as translocations, in which chunks of DNA are displaced from one part of the genome to another, and copy number variations, in which DNA sequences are repeated several times or more. (Translocations are thought to underlie various forms of cancer and other diseases, while copy number variations are linked to a range of neurological and developmental disorders.)

The company reports reading a stretch of DNA roughly 48,000 bases long. “That’s by far the longest piece of DNA that anyone’s claimed to read,” says Jeffery Schloss, program director for technology development at the National Human Genome Research Institute.

Oxford Nanopore’s new product line (which will begin shipping later this year) will include a miniaturized portable device, roughly the size of two decks of cards, that can plug directly into a computer’s USB port and is capable of sequencing small volumes of DNA. A larger desktop machine can handle larger volumes; clusters of these machines will be used for sequencing whole genomes. Although the company has not yet announced pricing for the desktop machine, the portable version could cost less than $900. This device will make it easier to read limited amounts of DNA in a host of settings, including remote clinics or food processing plants, where inspectors could monitor for contamination by dangerous strains of bacteria.

Facebook’s Timeline

The social-networking company is collecting and analyzing consumer data on an unprecedented scale.

Facebook recently introduced its Timeline interface to its 850 million monthly active users. The interface is designed to make it easy to navigate much of the immense amount of information that the social network has gathered about each of its users, and to prompt them to add and share even more in a way that’s easy to analyze.

Facebook’s motivation is to better target the advertisements that are responsible for 85 percent of its revenue. In part, successful targeting is a numbers game. If reported trends have held steady, Facebook’s data warehouse was adding 625 terabytes of compressed data daily by last January. Timeline’s new features are bound to boost that number dramatically, potentially providing Facebook with more personal data than any other ad seller online can access.

In the past, much of the data that users contributed to Facebook was in the form of unstructured status updates. The addition of a “Like” button, and the ability to link that button to third-party websites (see “TR10: Social Indexing,” May/June 2011), provided somewhat more fine-grained information that could be used for targeting ads. Timeline goes well beyond that, prompting users to add an extensive array of metadata to their updates, which makes mining value much easier. And by design, it encourages users to revisit and add more information to old updates, or retroactively add completely new biographical information.

One way Timeline gets users to add marketable meaning is by asking them to categorize their updates under a broad collection of “Life Events,” which includes tags for actions like buying a home or vehicle. A user who notes a vehicle purchase is prompted to specify details such as the type, make, and model year of the car, along with when and where the purchase was made and whom the user was with at the time. Connecting the dots, Facebook may determine the gender, income bracket, educational level, and profession of the kind of person likely to buy a specific car.
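The difference between a free-text status update and a structured Life Event is easiest to see as a record type. The schema below is hypothetical, since the text does not describe Facebook's internal format; its fields simply mirror the vehicle-purchase prompts listed above, and the tally shows how structured fields can be aggregated directly, with no text parsing.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class VehiclePurchase:
    """Hypothetical record for a 'bought a vehicle' Life Event."""
    vehicle_type: str
    make: str
    model_year: int
    purchased_on: date
    place: Optional[str] = None
    with_whom: List[str] = field(default_factory=list)


def purchases_by_make(events: List[VehiclePurchase]) -> Counter:
    """Tally purchases per make: trivial with structured fields, hard with free text."""
    return Counter(event.make for event in events)


events = [
    VehiclePurchase("sedan", "Honda", 2011, date(2012, 1, 14), "Springfield", ["Sam"]),
    VehiclePurchase("pickup", "Ford", 2012, date(2012, 2, 3)),
    VehiclePurchase("hatchback", "Honda", 2012, date(2012, 3, 9)),
]
print(purchases_by_make(events).most_common())
```

Joining records like these with profile fields such as age, education, or employer is the kind of dot-connecting the paragraph describes.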

This growing trove of data is a bonanza to marketers, but it’s also a challenge for Facebook, which must keep up with the flood of bits. Approximately 10 percent of Facebook’s revenue is devoted to R&D, including efforts to improve the speed, efficiency, and scalability of its infrastructure. If previous spending patterns hold true, much of the company’s 2012 capital-expense allocation—more than $1.6 billion—is likely to be devoted to servers and storage devices.

Timeline is making real the concept of the “permanent record,” in the form of a computer-assisted autobiography—a searchable multimedia diary of our lives that hovers in the cloud. But it may also have an unintended effect of calling users’ attention to just how much Facebook knows about them. Normally, “when people share information about themselves, they see a snapshot,” says Deirdre Mulligan, a professor at the UC Berkeley School of Information. “When people see Timeline, they become aware that all those bits and pieces are more than the sum of the parts. They suddenly understand the bigness of their own data.”

High-Speed Materials Discovery

A new way to identify battery materials suitable for mass production could revolutionize energy storage.

Wildcat starts with a wide range of precursor materials that may have potential for energy storage and other applications.

Electric cars could travel farther, and smart phones could have more powerful processors and better, brighter screens, thanks to batteries based on new materials being developed by San Diego–based Wildcat Discovery Technologies.

The company is accelerating the identification of valuable energy storage materials by testing thousands of substances at a time. In March of last year, it announced a lithium cobalt phosphate cathode that boosts energy density by nearly a third over current cathodes in popular lithium iron phosphate batteries. The company also unveiled an electrolyte additive that allows batteries to work more reliably at higher voltages.

Here, some precursor materials have been refined into powder suitable for making battery electrodes.

Choosing the optimal materials for batteries is a particularly tricky problem. The devices have three principal components: an anode, a cathode, and an electrolyte. Not only can each be formed from almost any blend of a huge number of compounds, but the three components have to work well together. That leaves many millions of promising combinations to explore.

Cathodes, anodes, and electrolytes are assembled into small working batteries, which are tested by the thousand in this tower. Testing materials together allows dud combinations to be eliminated quickly.

To hunt down winning combinations, Wildcat has adopted a strategy originally developed by drug discovery labs: high-throughput combinatorial chemistry. Instead of testing one material at a time, Wildcat methodically runs through thousands of tests in parallel, synthesizing and testing some 3,000 new material combinations a week. “We’ve got materials in the pipeline that could triple energy density,” says CEO Mark Gresser.
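Combinatorial screening can be sketched as enumerate, score in parallel, and filter. In this illustration the material labels and the scoring function are made up (a real lab would measure capacity, voltage, and cycle life), a thread pool stands in for parallel lab hardware, and the 200-candidates-per-component figure used for the timing estimate is hypothetical; only the rate of 3,000 combinations a week comes from the text.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product
from typing import Callable, List, Sequence, Tuple

Combo = Tuple[str, str, str]  # (anode, cathode, electrolyte)


def screen(anodes: Sequence[str], cathodes: Sequence[str], electrolytes: Sequence[str],
           score: Callable[[Combo], float], threshold: float) -> List[Tuple[Combo, float]]:
    """Score every combination in parallel; return those at or above threshold, best first."""
    combos = list(product(anodes, cathodes, electrolytes))
    with ThreadPoolExecutor() as pool:
        scores = list(pool.map(score, combos))  # results come back in input order
    keep = [(c, s) for c, s in zip(combos, scores) if s >= threshold]
    return sorted(keep, key=lambda cs: cs[1], reverse=True)


def weeks_to_exhaust(candidates_per_component: int, tests_per_week: int) -> float:
    """Weeks needed to test every anode/cathode/electrolyte triple."""
    return candidates_per_component ** 3 / tests_per_week


# Stand-in scores for hypothetical materials.
ANODE = {"A1": 1, "A2": 2}
CATHODE = {"C1": 1, "C2": 3}


def toy_score(combo: Combo) -> float:
    anode, cathode, electrolyte = combo
    return ANODE[anode] + CATHODE[cathode] + (1 if electrolyte == "E2" else 0)


print(screen(list(ANODE), list(CATHODE), ["E1", "E2"], toy_score, threshold=5))
print(f"{weeks_to_exhaust(200, 3000):,.0f} weeks")
```

With 200 candidates per component there are 8 million triples, about 2,667 weeks (more than 50 years) of testing at 3,000 a week, which is why eliminating dud combinations early matters so much.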

Others have tried the combinatorial technique to find new battery materials, but they’ve run into a stumbling block. The easy way to test thousands of materials is to deposit a sample of each one in a thin film atop a substrate. This approach did allow previous researchers to turn up promising materials for battery components—but then candidates would typically prove unsuited to cost-effective large-scale production processes.

To process so many samples, Wildcat relies heavily on automation. This assay machine weighs and records vials of materials.

To avoid that time-wasting detour, Wildcat found ways to produce samples using miniaturized versions of large-scale production techniques. In effect, the candidate materials are being tested for ease of manufacturing at the same time as they’re being tested for performance. Wildcat also tests the materials wired together as actual batteries, and in a variety of potential operating conditions. “There are a lot of variables that affect battery performance, including temperature and voltage, and we examine all of them,” says Gresser. The result is that a material that performs well in a Wildcat test bed will probably perform well in field tests.

If Wildcat is successful, its efforts could lead to batteries that are smaller or more powerful than their present-day counterparts—improvements that will appeal to the makers of smart phones and electric vehicles alike.