10 Breakthrough Technologies 2008

Emerging Technologies: 2008

Each year, Technology Review selects what it believes are the 10 most important emerging technologies. The winners are chosen based on the editors’ coverage of key fields. The question that we ask is simple: is the technology likely to change the world? Some of these changes are on the largest scale possible: better biofuels, more efficient solar cells, and green concrete all aim at tackling global warming in the years ahead. Other changes will be more local and involve how we use technology: for example, 3-D screens on mobile devices, new applications for cloud computing, and social television. And new ways to implant medical electronics and develop drugs for diseases will affect us on the most intimate level of all, with the promise of making our lives healthier.

This story was part of our March/April 2008 issue.

Atomic Magnetometers

John Kitching’s tiny magnetic-field sensors will take MRI where it’s never gone before.

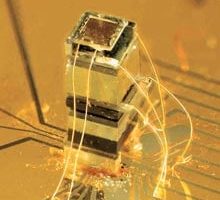

Shrinking sensors: A completed magnetometer built by NIST physicists is shown above. It consists of a small infrared laser (glued to a gold-coated plate), the cesium-filled cell, and a light detector. JIM YOST; COURTESY OF JOHN KITCHING

Magnetic fields are everywhere, from the human body to the metal in a buried land mine. Even molecules such as proteins generate their own distinctive magnetic fields. Both magnetic resonance imaging (MRI), which yields stunningly detailed images of the body, and nuclear magnetic resonance spectroscopy (NMR), which is used to study proteins and other compounds such as petroleum, rely on magnetic information. But the sensors currently used to detect these faint but significant magnetic fields all have disadvantages. Some are portable and cheap but not very sensitive; others are highly sensitive but stationary, expensive, and power-hungry.

Now John Kitching, a physicist at the National Institute of Standards and Technology in Boulder, CO, is developing tiny, low-power magnetic sensors almost as sensitive as their big, expensive counterparts. About the size of a fat grain of rice, the sensors are called atomic magnetometers. Kitching hopes that they will one day be incorporated into everything from portable MRI machines to faster and cheaper detectors for unexploded bombs.

The tiny sensors have three key components, stacked vertically on top of a silicon chip. An off-the-shelf infrared laser and an infrared photodetector sandwich a glass-and-silicon cube filled with vaporized cesium atoms. In the absence of a magnetic field, the laser light passes straight through the cesium atoms. In the presence of even very weak magnetic fields, though, the atoms’ alignment changes, allowing them to absorb an amount of light proportional to the strength of the field. This change is picked up by the photodetector. “It’s a simple configuration with extremely good sensitivity,” Kitching says.

Atomic magnetometers have been around for about 50 years; most have large, sensitive vapor cells, about the size of soda cans, made using glassblowing techniques. The most sensitive of these can detect fields on the order of a femtotesla–about one-fifty-billionth the strength of Earth’s magnetic field. Kitching’s innovation was to shrink the vapor cell to a volume of only a few cubic millimeters, decreasing power usage while keeping performance comparable.
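The femtotesla comparison above can be checked with a line of arithmetic. The sketch below assumes a typical surface value of about 50 microtesla for Earth's field; that figure is not stated in the article and varies with location, and the function name is illustrative only.

```python
# Assumption (not from the article): Earth's surface magnetic field is
# taken as about 50 microtesla; the real value varies by location.
EARTH_FIELD_TESLA = 50e-6
FEMTOTESLA = 1e-15

def fraction_of_earth_field(field_tesla):
    """Return a field strength as a fraction of the assumed Earth field."""
    return field_tesla / EARTH_FIELD_TESLA

if __name__ == "__main__":
    fraction = fraction_of_earth_field(FEMTOTESLA)
    # Under the assumed 50 microtesla, one femtotesla is about
    # one part in fifty billion of Earth's field.
    print(f"1 fT is roughly 1 part in {1 / fraction:.1e} of Earth's field")
```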

Working with five other physicists, Kitching makes the vapor cells using micromachining techniques. They begin by using a combination of lithography and chemical etching to punch square holes three millimeters across into a silicon wafer. Then they clamp the silicon to a slip of glass and create a bond using high heat and a voltage, turning the square hole into a topless box with a glass bottom.

Inside a vacuum chamber, they use a tiny glass syringe to fill the box with vaporized cesium atoms; then they seal the box with another slip of glass at high heat. (This must be done in a vacuum because cesium reacts vigorously with water and oxygen.) Next, the physicists mount the finished vapor cell on a chip, along with the infrared laser and the photodetector. They pass a current through thin conductive films on the top and bottom of the cell to produce heat, which keeps the cesium atoms vaporized.

Kitching currently builds magnetometers a few at a time in the lab, but he has designed them with bulk manufacturing in mind. Many copies of each component are carved out simultaneously from a single silicon wafer. Several wafers, each containing multiple copies of a different component, could be layered one on top of the other. Then the stack could be sliced into multiple magnetometers.

Made in this inexpensive way, the low-power sensors could be set into portable, battery-powered imaging arrays. Such arrays could easily map out the strength and extent of magnetic fields; the more sensors in an array, the more information it can provide about an object’s location and shape. Soldiers, for example, could use such arrays to find unexploded bombs and improvised explosive devices more quickly and cheaply.

The tiny sensors could also revolutionize MRI and NMR. Both technologies rely on powerful, cumbersome, expensive magnets that require costly cooling systems. Because Kitching’s sensors can detect very weak magnetic fields, MRI and NMR machines incorporating them might be able to get good pictures using a magnet that’s much weaker–and therefore smaller and cheaper.

As a result, MRI could become more widely available. And for the first time, doctors could use it to examine patients with pacemakers or other metallic implants that can’t be exposed to powerful magnets. Portable systems might even be developed for use in ambulances or on battlefields. And NMR could move from the lab into the field, where it could help oil and mining companies assess promising underground deposits.

Kitching and his colleagues recently showed that the sensors can measure NMR signals produced by water. Much remains to be done, Kitching says, before the devices can resolve faint signals from multiple chemical structures–distinguishing, say, between several possible trace contaminants in a water sample. Likewise, portable MRI machines will take some work. But with Kitching’s miniaturized magnetometers, the challenge will shift from gathering magnetic information to interpreting it.

Cellulolytic Enzymes

Frances Arnold is designing better enzymes for making biofuels from cellulose.

Frances Arnold GREGG SEGAL

In December, President Bush signed the Energy Independence and Security Act of 2007, which calls for U.S. production of renewable fuels to reach 36 billion gallons a year–nearly five times current levels–by 2022. Of that total, cellulosic biofuels derived from sources such as agricultural waste, wood chips, and prairie grasses are supposed to account for 16 billion gallons. If the mandates are met, gasoline consumption should decline significantly, reducing both greenhouse-gas emissions and imports of foreign oil.

The ambitious plan faces a significant hurdle, however: no one has yet demonstrated a cost-competitive industrial process for making cellulosic biofuels. Today, nearly all the ethanol produced in the United States is made from the starch in corn kernels, which is easily broken down into the sugars that are fermented to make fuel. Making ethanol from cheaper sources will require an efficient way to free sugar molecules packed together to form crystalline chains of cellulose, the key structural component of plants. That’s “the most expensive limiting step right now for the large-scale commercialization of [cellulosic] biofuels,” says protein engineer Frances Arnold, a professor of chemical engineering and biochemistry at Caltech.

The key to more efficiently and cheaply breaking down cellulose, Arnold and many others believe, is better enzymes. And Arnold, who has spent the last two decades designing enzymes for use in everything from drugs to stain removers, is confident that she’s well on her way to finding them.

Cellulosic biofuels have many advantages over both gasoline and corn ethanol. Burning cellulosic ethanol rather than gasoline, for instance, could cut cars’ greenhouse-gas emissions by 87 percent; corn ethanol achieves reductions of just 18 to 28 percent. And cellulose is the most abundant organic material on earth.

But whereas converting cornstarch into sugar requires a single enzyme, breaking down cellulose involves a complex array of enzymes, called cellulases, that work together. In the past, cellulases found in fungi have been recruited to do the job, but they have proved too slow and unstable. Efforts to improve their performance by combining them in new ways or tweaking their constituent amino acids have been only moderately successful. Researchers have reduced the cost of industrial cellulolytic enzymes to 20 to 50 cents per gallon of ethanol produced. But the cost will have to fall to three or four cents per gallon for cellulosic ethanol to compete with corn ethanol.

Ultimately, Arnold wants to do more than just make cheaper, more efficient enzymes for breaking down cellulose. She wants to design cellulases that can be produced by the same microörganisms that ferment sugars into biofuel. Long a goal of researchers, “superbugs” that can both metabolize cellulose and create fuel could greatly lower the cost of producing cellulosic biofuels. “If you consolidate these two steps, then you get synergies that lower the cost of the overall process,” Arnold says.

Consolidating those steps will require cellulases that work in the robust organisms used in industrial fermentation processes–such as yeast and bacteria. The cellulases will need to be stable and highly active, and they’ll have to tolerate high sugar levels and function in the presence of contaminants. Moreover, researchers will have to be able to produce the organisms in sufficient quantities. This might seem like a tall order, but over the years, Arnold has developed a number of new tools for making novel proteins. She pioneered a technique, called directed evolution, that involves creating many variations of genes that code for specific proteins. The mutated genes are inserted into microörganisms that churn out the new proteins, which are then screened for particular characteristics.
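The mutate-and-screen loop of directed evolution can be sketched in a few lines. This is a toy illustration, not Arnold's method: the `screen` function scores a sequence against a hypothetical "ideal" sequence, whereas a real screen measures enzyme activity in the lab and has no known target. All names, rates, and library sizes are assumptions chosen for the example.

```python
import random

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"

def mutate(sequence, rate, rng):
    """Copy a sequence, replacing each position at random with probability `rate`."""
    return "".join(
        rng.choice(AMINO_ACIDS) if rng.random() < rate else residue
        for residue in sequence
    )

def screen(sequence, target):
    """Toy assay (assumption): count positions matching a hypothetical ideal sequence."""
    return sum(a == b for a, b in zip(sequence, target))

def directed_evolution(parent, target, rounds=30, library_size=200, rate=0.05, seed=0):
    """Repeatedly build a library of variants and keep the best-scoring one."""
    rng = random.Random(seed)
    best = parent
    for _ in range(rounds):
        library = [mutate(best, rate, rng) for _ in range(library_size)]
        library.append(best)  # keep the parent so the score never drops
        best = max(library, key=lambda s: screen(s, target))
    return best

if __name__ == "__main__":
    target = "MKTAYIAKQR"   # hypothetical sequence, for illustration only
    parent = "AAAAAAAAAA"
    evolved = directed_evolution(parent, target)
    print(parent, screen(parent, target))
    print(evolved, screen(evolved, target))
```

Keeping the parent in each library is a design choice that makes the best score non-decreasing from round to round; real campaigns balance library size against how many variants the screen can handle.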

Her latest strategy is a computational approach that can rapidly identify thousands of new protein sequences for screening. This approach generates many more sequence variants than other methods do, greatly increasing the chances of creating functional molecules with useful new properties.

Arnold is using the technique to build libraries containing thousands of new cellulase genes. She and her colleagues will then screen the cellulases to see how they act as part of a mixture of enzymes. “If you test them simply by themselves, you really don’t know how they work as a group,” she says.

To fulfill her ultimate goal of a superbug able to feed on cellulose and produce biofuels, Arnold is working with James Liao, a professor of chemical engineering at the University of California, Los Angeles. Liao recently engineered E. coli that can efficiently convert sugar into butanol, a higher-energy biofuel than ethanol. Arnold hopes to be able to incorporate her new enzymes into Liao’s butanol-producing microbes. Gevo, a startup cofounded by Arnold and based in Denver, CO, has licensed Liao’s technology for use in the large-scale production of advanced biofuels, including butanol.

Overcoming cellulose’s natural resistance to being broken down is “one of the most challenging protein-engineering problems around,” says Arnold. Solving it will help determine whether low-emission biofuels will ever be a viable substitute for fossil fuels.

Connectomics

Jeff Lichtman hopes to elucidate brain development and disease with new technologies that illuminate the web of neural circuits.

BrainBows: Genetically engineering mice so that their brain cells express different combinations of fluorescent colors reveals the brain’s complicated anatomy. In the image, round green neurons are interspersed with diffuse support cells called astrocytes. JEAN LIVET

Displayed on Jeff Lichtman’s computer screen in his office at Harvard University is what appears to be an elegant drawing of a tree. Thin multicolored lines snake upward in parallel, then branch out in twos and threes, their tips capped by tiny leaves. Lichtman is a neuroscientist, and the image is the first comprehensive wiring diagram of part of the mammalian nervous system. The lines denote axons, the long, hairlike extensions of nerve cells that transmit signals from one neuron to the next; the leaves are synapses, the connections that the axons make with other neurons or muscle cells.

The diagram is the fruit of an emerging field called “connectomics,” which attempts to physically map the tangle of neural circuits that collect, process, and archive information in the nervous system. Such maps could ultimately shed light on the early development of the human brain and on diseases that may be linked to faulty wiring, such as autism and schizophrenia. “The brain is essentially a computer that wires itself up during development and can rewire itself,” says Sebastian Seung, a computational neuroscientist at MIT, who is working with Lichtman. “If we have a wiring diagram of the brain, that could help us understand how it works.”

Although researchers have been studying neural connectivity for decades, existing tools don’t offer the resolution needed to reveal how the brain works. In particular, scientists haven’t been able to generate a detailed picture of the hundreds of millions of neurons in the brain, or of the connections between them.

Lichtman’s technology–developed in collaboration with Jean Livet, a former postdoc in his lab, and Joshua Sanes, director of the Center for Brain Science at Harvard–paints nerve cells in nearly 100 colors, allowing scientists to see at a glance where each axon leads. Understanding this wiring should shed light on how information is processed and transferred between different brain areas.

To create their broad palette, Lichtman and his colleagues genetically engineered mice to carry multiple copies of genes for three proteins that fluoresce in different colors–yellow, red, or cyan. The mice also carry DNA encoding an enzyme that randomly rearranges these genes so that individual nerve cells produce an arbitrary combination of the fluorescent proteins, creating a rainbow of hues. Then the researchers use fluorescence microscopy to visualize the cells.
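A rough counting sketch shows how a handful of gene copies can yield many distinguishable hues. It assumes a simplified model that the article does not spell out: each gene copy in a cell ends up expressing exactly one of the three proteins, and a cell's hue depends only on how many copies express each color. The function names and the copy counts are illustrative.

```python
from math import comb
import random

COLORS = ("yellow", "red", "cyan")

def distinct_mixes(copies):
    """Count the ways `copies` gene copies can split across the three colors."""
    return comb(copies + len(COLORS) - 1, len(COLORS) - 1)

def random_cell(copies, rng):
    """Simulate one cell: each copy independently picks a color (assumed model)."""
    picks = [rng.choice(COLORS) for _ in range(copies)]
    return tuple(picks.count(color) for color in COLORS)

if __name__ == "__main__":
    for copies in (1, 2, 3, 6, 12):
        print(copies, "copies ->", distinct_mixes(copies), "possible mixes")
    rng = random.Random(42)
    print("sample cell (yellow, red, cyan counts):", random_cell(3, rng))
```

Under this toy model, three copies give 10 possible mixes and a dozen copies give 91, so the count grows quickly with copy number. How many of those mixes a microscope can actually tell apart is a separate question that the sketch does not address.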

“This will be an incredibly powerful tool,” says Elly Nedivi, a neuroscientist at MIT who is not involved in the research. “It will open up huge opportunities in terms of looking at neural connectivity.”

Lichtman and others hope that the ability to study multiple neural circuits simultaneously and in depth will provide unprecedented insight into how the wiring of the nervous system can go awry. “There’s a whole class of disorders of the nervous system that people suspect are due to defects in the connections between nerve cells, but we don’t have real tools to trace the connections,” says Lichtman. “It would be very useful to look at wiring in animal models of autism-spectrum disorders or psychiatric illness.”

In experiments so far, Lichtman’s group has used the technology to trace all the connections in a small slice of the cerebellum, the part of the brain that controls balance and movement. Other scientists have already expressed interest in using the technology to study neural connections in the retina, the cortex, and the olfactory bulb, as well as in non-neural cell types.

Generating maps of even a small chunk of the brain will be a huge challenge: the human brain consists of an estimated 100 billion neurons, with trillions of synapses. Scientists will need to find ways to store, annotate, and mine the volumes of data they create, and to meld information about connectivity with findings about the molecular and physiological characteristics of neurons in the circuits. But now, at least, they have a key tool with which to begin the massive effort of creating a wiring diagram of the brain.

Graphene Transistors

A new form of carbon being pioneered by Walter de Heer of Georgia Tech could lead to speedy, compact computer processors. MAXWELL GUBERMAN, GEORGIA TECH

The remarkable increases in computer speed over the last few decades could be approaching an end, in part because silicon is reaching its physical limits. But this past December, in a small Washington, DC, conference room packed to overflowing with an audience drawn largely from the semiconductor industry, Georgia Tech physics professor Walter de Heer described his latest work on a surprising alternative to silicon that could be far faster. The material: graphene, a seemingly unimpressive substance found in ordinary pencil lead.

Theoretical models had previously predicted that graphene, a form of carbon consisting of layers one atom thick, could be made into transistors more than a hundred times as fast as today’s silicon transistors. In his talk, de Heer reported making arrays of hundreds of graphene transistors on a single chip. Though the transistors still fall far short of the material’s ultimate promise, the arrays, which were fabricated in collaboration with MIT’s Lincoln Laboratory, offer strong evidence that graphene could be practical for future generations of electronics.

Today’s silicon-based computer processors can perform only a certain number of operations per second without overheating. But electrons move through graphene with almost no resistance, generating little heat. What’s more, graphene is itself a good thermal conductor, allowing heat to dissipate quickly. Because of these and other factors, graphene-based electronics could operate at much higher speeds. “There’s an ultimate limit to the speed of silicon–you can only go so far, and you cannot increase its speed any more,” de Heer says. Right now silicon is stuck in the gigahertz range. But with graphene, de Heer says, “I believe we can do a terahertz–a factor of a thousand over a gigahertz. And if we can go beyond, it will be very interesting.”

Besides making computers faster, graphene electronics could be useful for communications and imaging technologies that require ultrafast transistors. Indeed, graphene is likely to find its first use in high-frequency applications such as terahertz-wave imaging, which can be used to detect hidden weapons. And speed isn’t graphene’s only advantage. Silicon can’t be carved into pieces smaller than about 10 nanometers without losing its attractive electronic properties. But the basic physics of graphene remains the same–and in some ways its electronic properties actually improve–in pieces smaller than a single nanometer.

Interest in graphene was sparked by research into carbon nanotubes as potential successors to silicon. Carbon nanotubes, which are essentially sheets of graphene rolled up into cylinders, also have excellent electronic properties that could lead to ultrahigh-performance electronics. But nanotubes have to be carefully sorted and positioned in order to produce complex circuits, and good ways to do this haven’t been developed. Graphene is far easier to work with.

In fact, the devices that de Heer announced in December were carved into graphene using techniques very much like those used to manufacture silicon chips today. “That’s why industry people are looking at what we’re doing,” he says. “We can pattern graphene using basically the same methods we pattern silicon with. It doesn’t look like a science project. It looks like technology to them.”

Graphene hasn’t always looked like a promising electronic material. For one thing, it doesn’t naturally exhibit the type of switching behavior required for computing. Semiconductors such as silicon can conduct electrons in one state, but they can also be switched to a state of very low conductivity, where they’re essentially turned off. By contrast, graphene’s conductivity can be changed slightly, but it can’t be turned off. That’s okay in certain applications, such as high-frequency transistors for imaging and communications. But such transistors would be too inefficient for use in computer processors.

In 2001, however, de Heer used a computer model to show that if graphene could be fashioned into very narrow ribbons, it would begin to behave like a semiconductor. (Other researchers, he learned later, had already made similar observations.) In practice, de Heer has not yet been able to fabricate graphene ribbons narrow enough to behave as predicted. But two other methods have been shown to have similar promise: chemically modifying graphene and putting a layer of graphene on top of certain other substrates. In his presentation in Washington, de Heer described how modifying graphene ribbons with oxygen can induce semiconducting behavior. Combining these different techniques, he believes, could produce the switching behavior needed for transistors in computer processors.

Meanwhile, the promise of graphene electronics has caught the semiconductor industry’s attention. Hewlett-Packard, IBM, and Intel (which has funded de Heer’s work) have all started to investigate the use of graphene in future products.

Modeling Surprise

Combining massive quantities of data, insights into human psychology, and machine learning can help manage surprising events, says Eric Horvitz. PHOTO: BETTMAN/CORBIS; GRAPHICS: JOHN HERSEY

Much of modern life depends on forecasts: where the next hurricane will make landfall, how the stock market will react to falling home prices, who will win the next primary. While existing computer models predict many things fairly accurately, surprises still crop up, and we probably can’t eliminate them. But Eric Horvitz, head of the Adaptive Systems and Interaction group at Microsoft Research, thinks we can at least minimize them, using a technique he calls “surprise modeling.”

Horvitz stresses that surprise modeling is not about building a technological crystal ball to predict what the stock market will do tomorrow, or what al-Qaeda might do next month. But, he says, “We think we can apply these methodologies to look at the kinds of things that have surprised us in the past and then model the kinds of things that may surprise us in the future.” The result could be enormously useful for decision makers in fields that range from health care to military strategy, politics to financial markets.

Granted, says Horvitz, it’s a far-out vision. But it’s given rise to a real-world application: SmartPhlow, a traffic-forecasting service that Horvitz’s group has been developing and testing at Microsoft since 2003.

SmartPhlow works on both desktop computers and Microsoft PocketPC devices. It depicts traffic conditions in Seattle, using a city map on which backed-up highways appear red and those with smoothly flowing traffic appear green. But that’s just the beginning. After all, Horvitz says, “most people in Seattle already know that such-and-such a highway is a bad idea in rush hour.” And a machine that constantly tells you what you already know is just irritating. So Horvitz and his team added software that alerts users only to surprises–the times when the traffic develops a bottleneck that most people wouldn’t expect, say, or when a chronic choke point becomes magically unclogged.

But how? To monitor surprises effectively, says Horvitz, the machine has to have both knowledge–a good cognitive model of what humans find surprising–and foresight: some way to predict a surprising event in time for the user to do something about it.

Horvitz’s group began with several years of data on the dynamics and status of traffic all through Seattle and added information about anything that could affect such patterns: accidents, weather, holidays, sporting events, even visits by high-profile officials. Then, he says, for dozens of sections of a given road, “we divided the day into 15-minute segments and used the data to compute a probability distribution for the traffic in each situation.”

That distribution provided a pretty good model of what knowledgeable drivers expect from the region’s traffic, he says. “So then we went back through the data looking for things that people wouldn’t expect–the places where the data shows a significant deviation from the averaged model.” The result was a large database of surprising traffic fluctuations.
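The two steps described above, building an expectation for each road section and 15-minute segment and then flagging large deviations from it, can be sketched as follows. This is a minimal illustration, not SmartPhlow's implementation: it summarizes each distribution by a mean and standard deviation, ignores context such as weather and events, and uses a hypothetical threshold of two standard deviations. The section name and speeds are made-up sample data.

```python
from collections import defaultdict
from statistics import mean, pstdev

SEGMENT_MINUTES = 15

def segment_of(minute_of_day):
    """Map a minute of the day (0-1439) to its 15-minute segment index."""
    return minute_of_day // SEGMENT_MINUTES

def build_model(observations):
    """Summarize historical (section, minute_of_day, speed) records per segment."""
    buckets = defaultdict(list)
    for section, minute, speed in observations:
        buckets[(section, segment_of(minute))].append(speed)
    return {key: (mean(speeds), pstdev(speeds)) for key, speeds in buckets.items()}

def is_surprise(model, section, minute, speed, threshold=2.0):
    """Flag a reading that deviates strongly from the historical average."""
    average, spread = model[(section, segment_of(minute))]
    if spread == 0:
        return speed != average
    return abs(speed - average) / spread > threshold

if __name__ == "__main__":
    # Hypothetical history: rush-hour speeds (mph) on one road section.
    history = [("section-A", 8 * 60 + 5, s) for s in (20, 22, 18, 21, 19)]
    model = build_model(history)
    print(is_surprise(model, "section-A", 8 * 60 + 10, 55))  # unusually clear road
    print(is_surprise(model, "section-A", 8 * 60 + 10, 21))  # ordinary congestion
```

Note that the check is symmetric: an unexpectedly clear road counts as a surprise just as an unexpected jam does, which matches the article's example of a chronic choke point becoming unclogged.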

Once the researchers spotted a statistical anomaly, they backtracked 30 minutes, to where the traffic seemed to be moving as expected, and ran machine-learning algorithms to find subtleties in the pattern that would allow them to predict the surprise. The algorithms are based on Bayesian modeling techniques, which calculate the probability, based on prior experience, that something will happen and allow researchers to subjectively weight the relevance of contributing events (see TR10: “Bayesian Machine Learning,” February 2004).
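The core of a Bayesian update can be shown with a single application of Bayes' rule. The sketch below is only the textbook formula, not the group's algorithms, and the numbers are invented for illustration: a 5 percent prior chance of a surprise, and an early cue seen 30 minutes beforehand in 60 percent of surprises but only 10 percent of normal periods.

```python
def posterior(prior, likelihood_if_surprise, likelihood_if_normal):
    """Bayes' rule: probability of a surprise given that an early cue was observed."""
    evidence = (likelihood_if_surprise * prior
                + likelihood_if_normal * (1 - prior))
    return likelihood_if_surprise * prior / evidence

if __name__ == "__main__":
    # All three inputs are hypothetical values chosen for the example.
    p = posterior(prior=0.05, likelihood_if_surprise=0.6, likelihood_if_normal=0.1)
    print(f"Probability of a surprise after seeing the cue: {p:.2f}")
```

With these made-up inputs, observing the cue raises the estimated chance of a surprise from 5 percent to 24 percent, which illustrates how prior experience and new evidence are combined.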

The resulting model works remarkably well, Horvitz says. When its parameters are set so that its false-positive rate shrinks to 5 percent, it still predicts about half of the surprises in Seattle’s traffic system. If that doesn’t sound impressive, consider that it tips drivers off to 50 percent more surprises than they would otherwise know about. Today, more than 5,000 Microsoft employees have this “surprise machine” loaded on their smart phones, and many have customized it to reflect their own preferences.

Horvitz’s group is working with Microsoft’s traffic and routing team on the possibility of commercializing aspects of SmartPhlow. And in 2005 Microsoft announced that it had licensed the core technology to Inrix of Kirkland, WA, which launched the Inrix Traffic application for Windows Mobile devices last March. The service offers traffic predictions, several minutes to five days in advance, for markets across the United States and England.

Although none of the technologies involved in SmartPhlow is entirely new, notes Daphne Koller, a probabilistic-modeling and machine-learning expert at Stanford University, their combination and application are unusual. “There has been a fair amount of work on anomaly detection in large data sets to detect things like credit card fraud or bioterrorism,” she says. But that work emphasizes the detection of present anomalies, she says, not the prediction of events that may occur in the near future. Additionally, most predictive models disregard statistical outliers; Horvitz’s specifically tracks them. The thing that makes his approach unique, though, is his focus on the human factor, Koller says: “He’s explicitly trying to model the human cognitive process.”

The question is how wide a range of human activities can be modeled this way. While the algorithms used in SmartPhlow are, of necessity, domain specific, Horvitz is convinced that the overall approach could be generalized to many other areas. He has already talked with political scientists about using surprise modeling to predict, say, unexpected conflicts. He is also optimistic that it could predict, for example, when an expert would be surprised by changes in housing prices in certain markets, in the Dow Jones Industrial Average, or in the exchange rate of a currency. It could even predict business trends. “Over the past few decades, companies have died because they didn’t foresee the rise of technologies that would lead to a major shift in the competitive landscape,” he says.

Most such applications are a long way off, Horvitz concedes. “This is a longer-term vision. But it’s very important, because it’s at the foundation of what we call wisdom: understanding what we don’t know.”

NanoRadio

Alex Zettl’s tiny radios, built from nanotubes, could improve everything from cell phones to medical diagnostics.

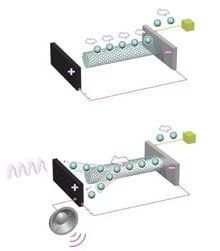

Tiny tunes: A nanoradio is a carbon nanotube anchored to an electrode, with a second electrode just beyond its free end. JOHN HERSEY

If you own a sleek iPod Nano, you’ve got nothing on Alex Zettl. The physicist at the University of California, Berkeley, and his colleagues have come up with a nanoscale radio, in which the key circuitry consists of a single carbon nanotube.

Any wireless device, from cell phones to environmental sensors, could benefit from nanoradios. Smaller electronic components, such as tuners, would reduce power consumption and extend battery life. Nanoradios could also steer wireless communications into entirely new realms, including tiny devices that navigate the bloodstream to release drugs on command.

Miniaturizing radios has been a goal ever since RCA began marketing its pocket-sized transistor radios in 1955. More recently, electronics manufacturers have made microscale radios, creating new products such as radio frequency identification (RFID) tags. About five years ago, Zettl’s group decided to try to make radios even smaller, working at the molecular scale as part of an effort to create cheap wireless environmental sensors.

Zettl’s team set out to miniaturize individual components of a radio receiver, such as the antenna and the tuner, which selects one frequency to convert into a stream of electrical pulses that get sent to a speaker. But integrating separate nanoscale components proved difficult. About a year ago, however, Zettl and his students had a eureka moment. “We realized that, by golly, one nanotube can do it all,” Zettl says. “Within a matter of days, we had a functioning radio.” The first two transmissions it received were “Layla” by Derek and the Dominos and “Good Vibrations” by the Beach Boys.

The Beach Boys song was an apt choice. Zettl’s nano receiver works by translating the electromagnetic oscillations of a radio wave into the mechanical vibrations of a nanotube, which are in turn converted into a stream of electrical pulses that reproduce the original radio signal. Zettl’s team anchored a nanotube to a metal electrode, which is wired to a battery. Just beyond the nanotube’s free end is a second metal electrode. When a voltage is applied between the electrodes, electrons flow from the battery through the first electrode and the nanotube and then jump from the nanotube’s tip across the tiny gap to the second electrode. The nanotube–now negatively charged–is able to “feel” the oscillations of a passing radio wave, which (like all electromagnetic waves) has both an electrical and a magnetic component.

Those oscillations successively attract and repel the tip of the tube, making the tube vibrate in sync with the radio wave. As the tube is vibrating, electrons continue to spray out of its tip. When the tip is farther from the second electrode, as when the tube bends to one side, fewer electrons make the jump across the gap. The fluctuating electrical signal that results reproduces the audio information encoded onto the radio wave, and it can be sent to a speaker.
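A toy numerical model can show why a vibrating tip that emits fewer electrons when bent recovers the audio signal. Everything here is an assumption made for illustration, not a description of Zettl's device: the radio wave is taken to be amplitude-modulated, the tube's displacement is assumed to follow the wave directly, the current is assumed to fall with the square of the displacement (bending to either side moves the tip away), and the frequencies are scaled far below real radio frequencies to keep the simulation small.

```python
import math

def am_displacement(t, carrier_hz, audio_hz, depth=0.5):
    """Tip displacement for an amplitude-modulated wave (assumed to track the wave)."""
    envelope = 1.0 + depth * math.sin(2 * math.pi * audio_hz * t)
    return envelope * math.cos(2 * math.pi * carrier_hz * t)

def emission_current(displacement, i0=1.0, k=0.1):
    """Assumed toy law: current drops with the square of the tip displacement."""
    return i0 - k * displacement ** 2

def demodulate(carrier_hz=1000.0, audio_hz=10.0, sample_hz=100_000.0, duration=0.2):
    """Average the emission current over each carrier cycle to expose the audio."""
    n = int(sample_hz * duration)
    current = [
        emission_current(am_displacement(i / sample_hz, carrier_hz, audio_hz))
        for i in range(n)
    ]
    window = int(sample_hz / carrier_hz)  # samples per carrier cycle
    return [sum(current[i:i + window]) / window for i in range(0, n - window, window)]

if __name__ == "__main__":
    audio = demodulate()
    # The averaged current dips when the audio envelope is large and
    # rises when it is small, so it carries the audio waveform.
    print(f"near envelope peak:   {audio[25]:.4f}")
    print(f"near envelope trough: {audio[75]:.4f}")
```

Because the squared displacement never changes sign, averaging over a carrier cycle leaves a slowly varying term that follows the audio envelope (inverted and slightly distorted in this toy model); a linear response would average to a constant and carry no audio.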

The next step for Zettl and his colleagues is to make their nanoradios send out information in addition to receiving it. But Zettl says that won’t be hard, since a transmitter is essentially a receiver run in reverse.

Nano transmitters could open the door to other applications as well. For instance, Zettl suggests that nanoradios attached to tiny chemical sensors could be implanted in the blood vessels of patients with diabetes or other diseases. If the sensors detect an abnormal level of insulin or some other target compound, the transmitter could then relay the information to a detector, or perhaps even to an implanted drug reservoir that could release insulin or another therapeutic on cue. In fact, Zettl says that since his paper on the nanotube radio came out in the journal Nano Letters, he’s received several calls from researchers working on radio-based drug delivery vehicles. “It’s not just fantasy,” he says. “It’s active research going on right now.”

Offline Web Applications

Adobe’s Kevin Lynch believes that computing applications will become more powerful when they take advantage of the browser and the desktop.

Kevin Lynch TOBY BURDITT

Web-based computer programs, unlike their desktop counterparts, are always up to date and are instantly available, no matter where the user is or what operating system she’s running. That’s why cloud computing–so called because it involves software that resides in the “clouds” of the Internet–has caused a “tidal shift in how people are actually creating software,” says Kevin Lynch, chief software architect at Adobe Systems. (For a review of Nicholas Carr’s new book on cloud computing, see “The Digital Utility.”) But cloud computing has drawbacks: users give up the ability to save data to their own hard drives, to drag and drop items between applications, and to receive notifications, such as appointment reminders, when the browser window is closed.

So while many companies have rushed to send users to the clouds, Lynch and his team have been planning the return trip. With the system they’re developing, the Adobe Integrated Runtime (AIR), programmers can use Web technologies to build desktop applications that people can run online or off.

The project is rooted in Lynch’s recognition of both the benefits and the limitations of the move from desktop to Web. He envisioned hybrid applications that would allow users to take simultaneous advantage of the Internet and their own machines’ capabilities. Lynch’s team started work on the concept in 2002 and launched AIR in beta last June.

AIR is a “runtime environment,” an extra layer of software that allows the same program to run on different operating systems and hardware. (Java is another example.) With AIR, developers can use Web technologies such as HTML and Flash to write software for the desktop. Users won’t have to seek out AIR to enjoy its benefits; they’ll be prompted to download it along with the first AIR applications they want to use.

The Adobe team chose to base the system on HTML and Flash for several reasons, Lynch says. First, it makes it easy for desktop applications to swap content with websites: for example, information from a website can be pulled into an AIR application with its formatting intact. Second, it should simplify development, encouraging a broader range of applications. Programmers can easily rebuild existing Web applications to work on the desktop. And in the same way that Web-based applications can be built once and will then run on any device with a browser, an application built on AIR will run on any machine that has AIR installed. (Adobe currently offers versions for Windows and Macintosh and is developing versions for Linux and mobile devices.)

Adobe is already working with partners to demonstrate AIR’s capabilities. One example: the popular auction site eBay has released a beta AIR-based application called eBay Desktop. Designed to improve the customer’s bidding experience, the application itself retrieves and displays content about eBay auctions rather than relying on a browser. It also takes advantage of the processing power of the user’s computer to provide search tools more powerful than those on the website. For example, it can scan search results for related keywords–a process that product manager Alan Lewis says works better on the desktop because the application can store and quickly access lots of relevant information on the user’s computer. The program also uses desktop alerts to notify users when someone bids on auctions they are following. AIR enabled the company to create a customized user interface, without constraints imposed by the browser’s design and controls.

Lynch says that AIR was a response to the Web’s evolution into a more interactive medium. The browser, he notes, was created for “the Web of pages”; while developers have stretched what can be done with it, Lynch sees the need for an interface more appropriate to the Web of software that people use today. AIR, he hopes, will be just that.

Probabilistic Chips

Krishna Palem thinks a little uncertainty in chips could extend battery life in mobile devices–and maybe the duration of Moore’s Law, too.

Krishna Palem BRENT HUMPHREYS

Krishna Palem is a heretic. In the world of microchips, precision and perfection have always been imperative. Every step of the fabrication process involves testing and retesting and is aimed at ensuring that every chip calculates the exact answer every time. But Palem, a professor of computing at Rice University, believes that a little error can be a good thing.

Palem has developed a way for chips to use significantly less power in exchange for a small loss of precision. His concept carries the daunting moniker “probabilistic complementary metal-oxide semiconductor technology”–PCMOS for short. Palem’s premise is that for many applications–in particular those like audio or video processing, where the final result isn’t a number–maximum precision is unnecessary. Instead, chips could be designed to produce the correct answer sometimes, but only come close the rest of the time. Because the errors would be small, so would their effects: in essence, Palem believes that in computing, close enough is often good enough.

Every calculation done by a microchip depends on its transistors’ registering either a 1 or a 0 as electrons flow through them in response to an applied voltage. But electrons move constantly, producing electrical “noise.” In order to overcome noise and ensure that their transistors register the correct values, most chips run at a relatively high voltage. Palem’s idea is to lower the operating voltage of parts of a chip–specifically, the logic circuits that calculate the least significant bits, such as the 3 in the number 21,693. The resulting decrease in signal-to-noise ratio means those circuits would occasionally arrive at the wrong answer, but engineers can calculate the probability of getting the right answer for any specific voltage. “Relaxing the probability of correctness even a little bit can produce significant savings in energy,” Palem says.
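The trade-off can be made concrete with a small software simulation. The sketch below is not Palem's design; it is a hypothetical illustration that assumes each of the lowest few result bits of an adder flips independently with probability `1 - p_correct`, standing in for logic run at a lower voltage. The names `noisy_add` and `max_error` and the default values are invented for the example.

```python
import random

def noisy_add(a, b, noisy_bits=4, p_correct=0.9, width=16, rng=random):
    """Add two unsigned integers, then flip each of the low `noisy_bits`
    result bits with probability 1 - p_correct (assumed error model)."""
    mask = (1 << width) - 1
    result = (a + b) & mask
    for bit in range(noisy_bits):
        if rng.random() > p_correct:
            result ^= 1 << bit  # an occasional wrong low-order bit
    return result

def max_error(noisy_bits):
    """Largest possible absolute error when only the low bits can flip."""
    return (1 << noisy_bits) - 1

if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so runs are repeatable
    exact = 21693 + 1000
    samples = [noisy_add(21693, 1000, rng=rng) for _ in range(1000)]
    worst = max(abs(s - exact) for s in samples)
    print("exact:", exact, "worst observed error:", worst, "bound:", max_error(4))
```

Because only the low-order bits are ever disturbed, the error is bounded no matter how often it occurs, which is why the approach suits results that are perceived rather than read as exact numbers.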

Within a few years, chips using such designs could boost battery life in mobile devices such as music players and cell phones. But in a decade or so, Palem’s ideas could have a much larger impact. By then, silicon transistors will be so small that engineers won’t be able to precisely control their behavior: the transistors will be inherently probabilistic. Palem’s techniques could then become important to the continuation of Moore’s Law, the exponential increase in transistor density–and thus in computing power–that has persisted for four decades.

When Palem began working on the idea around 2002, skepticism about the principles behind PCMOS was “pretty universal,” he says. That changed in 2006. He and his students simulated a PCMOS circuit that would be part of a chip for processing video, such as streaming video in a cell phone, and compared it with the performance of existing chips. They presented the work at a technical conference, and in a show of hands, much of the audience couldn’t discern any difference in picture quality.

Applications where the limits of human perception reduce the need for precision are perfectly suited to PCMOS designs, Palem says. In cell phones, laptop computers, and other mobile devices, graphics and sound processing consume a significant proportion of the battery power; Palem believes that PCMOS chips might increase battery life as much as tenfold without compromising the user’s experience.

PCMOS also has obvious applications in fields that employ probabilistic approaches, such as cryptography and machine learning. Algorithms used in these fields are typically designed to arrive quickly at an approximate answer. Since PCMOS chips do the same thing, they could achieve in hardware what must be done with software today–with a significant gain in both energy efficiency and speed. Palem envisions devices that use one or more PCMOS coprocessors to handle specialized tasks, such as encryption, while a traditional chip assists with other computing chores.

Palem and his team have already built and started testing a cryptography engine. They are also designing a graphics engine and a chip that people could use to adjust the power consumption and performance of their cell phones: consumers might choose high video or call quality and consume more power or choose lower quality and save the battery. Palem is discussing plans for one or more startup companies to commercialize such PCMOS chips. Companies could launch as early as next year, and products might be available in three or four years.

As silicon transistors become smaller, basic physics means they will become less reliable, says Shekhar Borkar, director of Intel’s Microprocessor Technology Lab. “So what you’re looking at is having a probability of getting the result you wanted,” he says. In addition to developing hardware designs, Palem has created a probabilistic analogue to the Boolean algebra that is at the core of computational logic circuits; it is this probabilistic logic that Borkar believes could keep Moore’s Law on track. Though he cautions that much work remains to be done, Borkar says Palem’s research “has a very vast applicability in any digital electronics.”

If Palem’s research plays out the way the optimists believe it will, he may have the rebel’s ultimate satisfaction: seeing his heresy become dogma.

Reality Mining

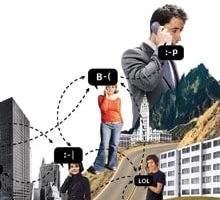

Sandy Pentland is using data gathered by cell phones to learn about human behavior. JULIEN PACAUD

Every time you use your cell phone, you leave behind a few bits of information. The phone pings the nearest cell-phone towers, revealing its location. Your service provider records the duration of your call and the number dialed.

Some people are nervous about trailing digital bread crumbs behind them. Sandy Pentland, however, revels in it. In fact, the MIT professor of media arts and sciences would like to see phones collect even more information about their users, recording everything from their physical activity to their conversational cadences. With the aid of some algorithms, he posits, that information could help us identify things to do or new people to meet. It could also make devices easier to use–for instance, by automatically determining security settings. More significant, cell-phone data could shed light on workplace dynamics and on the well-being of communities. It could even help project the course of disease outbreaks and provide clues about individuals’ health. Pentland, who has been sifting data gleaned from mobile devices for a decade, calls the practice “reality mining.”

Reality mining, he says, “is all about paying attention to patterns in life and using that information to help [with] things like setting privacy patterns, sharing things with people, notifying people–basically, to help you live your life.”

Researchers have been mining data from the physical world for years, says Alex Kass, a researcher who leads reality-mining projects at Accenture, a consulting and technology services firm. Sensors in manufacturing plants tell operators when equipment is faulty, and cameras on highways monitor traffic flow. But now, he says, “reality mining is getting personal.”

Within the next few years, Pentland predicts, reality mining will become more common, thanks in part to the proliferation and increasing sophistication of cell phones. Many handheld devices now have the processing power of low-end desktop computers, and they can also collect more varied data, thanks to devices such as GPS chips that track location. And researchers such as Pentland are getting better at making sense of all that information.

To create an accurate model of a person’s social network, for example, Pentland’s team combines a phone’s call logs with information about its proximity to other people’s devices, which is continuously collected by Bluetooth sensors. With the help of factor analysis, a statistical technique commonly used in the social sciences to explain correlations among multiple variables, the team identifies patterns in the data and translates them into maps of social relationships. Such maps could be used, for instance, to accurately categorize the people in your address book as friends, family members, acquaintances, or coworkers. In turn, this information could be used to automatically establish privacy settings–for instance, allowing only your family to view your schedule. With location data added in, the phone could predict when you would be near someone in your network. In a paper published last May, Pentland and his group showed that cell-phone data enabled them to accurately model the social networks of about 100 MIT students and professors. They could also precisely predict where subjects would meet with members of their networks on any given day of the week.
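To give a feel for how call logs and proximity records might be combined, here is a deliberately simplified sketch. It does not implement factor analysis or Pentland's method; it swaps in a plain threshold heuristic over merged features, and the record formats, the working-hours window, the thresholds, and the function names are all assumptions made for illustration.

```python
from collections import defaultdict

WORK_HOURS = range(9, 17)  # 09:00-16:59, an assumed working day

def build_features(call_log, proximity_log):
    """Merge call counts and Bluetooth sightings into one record per contact.

    call_log: iterable of contact names, one entry per call.
    proximity_log: iterable of (contact, weekday 0-6, hour 0-23) sightings.
    """
    feats = defaultdict(lambda: {"calls": 0, "work": 0, "off": 0})
    for contact in call_log:
        feats[contact]["calls"] += 1
    for contact, weekday, hour in proximity_log:
        at_work = weekday < 5 and hour in WORK_HOURS
        feats[contact]["work" if at_work else "off"] += 1
    return dict(feats)

def label(f):
    """Hypothetical rule of thumb, standing in for a statistical model."""
    if f["work"] > f["off"] and f["work"] >= 3:
        return "coworker"
    if f["off"] >= 3 and f["calls"] >= 2:
        return "friend or family"
    return "acquaintance"

if __name__ == "__main__":
    calls = ["ana", "ana", "ben"]
    sightings = [("ben", 0, 10), ("ben", 1, 11), ("ben", 2, 14),
                 ("ana", 5, 20), ("ana", 6, 12), ("ana", 2, 19),
                 ("cy", 3, 12)]
    for name, f in build_features(calls, sightings).items():
        print(name, label(f))
```

A statistical technique such as factor analysis would learn the underlying patterns from the data rather than rely on hand-picked thresholds like these.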

This relationship information could have much broader implications. Earlier this year, Nathan Eagle, a former MIT grad student who had led the reality-mining research in Pentland’s lab, moved to the Santa Fe Institute in New Mexico. There, he plans to use cell-phone data to improve existing computational models of how diseases like SARS spread. Most epidemiology models don’t back up their predictions with detailed data on where and with whom people spend their time, Eagle says. The addition of relationship and proximity data would make these models more accurate. “What’s interesting is that you can see that a disease spreads based on who is infected first,” Eagle says.

Taking advantage of other sensors in cell phones, such as the microphone or the accelerometers built into newer devices like Apple’s iPhone, could even extend the benefits of reality mining into personal health care, Pentland says. For example, clues to diagnosing depression could be found in the way a person talks: depressed people may speak more slowly, a change that speech analysis software on a phone might recognize more readily than friends or family do. Monitoring a phone’s motion sensors might reveal slight changes in gait, which could be an early indicator of ailments such as Parkinson’s disease.

While the promise of reality mining is great, the idea of collecting so much personal information naturally raises many questions about privacy, Pentland admits. He says it’s crucial that behavior-logging technology not be forced on anyone. But legal statutes are lagging behind our data collection abilities, he says, which makes it all the more important to begin discussing how the technology will be used.

For now, though, Pentland is excited about the potential of reality mining to simplify people’s lives. “All of the devices that we have are completely ignorant of the things that matter most,” he says. “They may know all sorts of stuff about Web pages and phone numbers. But at the end of the day, we live to interact with other people. Now, with reality mining, you can see how that happens … it’s an interesting God’s-eye view.”

Wireless Power

Physicist Marin Soljačić is working toward a world of wireless electricity.

Wireless light: Marin Soljačić (top) and colleagues used magnetic resonance coupling to power a 60-watt light bulb. Tuned to the same frequency, two 60-centimeter copper coils can transmit electricity over a distance of two meters, through the air and around an obstacle (bottom). AMPS/MIT LIBRARIES (TOP); BRYAN CHRISTIE DESIGN (BOTTOM)

In the late 19th century, the realization that electricity could be coaxed to light up a bulb prompted a mad dash to determine the best way to distribute it. At the head of the pack was inventor Nikola Tesla, who had a grand scheme to beam electricity around the world. Having difficulty imagining a vast infrastructure of wires extending into every city, building, and room, Tesla figured that wireless was the way to go. He drew up plans for a tower, about 57 meters tall, that he claimed would transmit power to points kilometers away, and even started to build one on Long Island. Though his team did some tests, funding ran out before the tower was completed. The promise of airborne power faded rapidly as the industrial world proved willing to wire up.

Then, a few years ago, Marin Soljačić, an assistant professor of physics at MIT, was dragged out of bed by the insistent beeping of a cell phone. “This one didn’t want to stop until you plugged it in for charging,” says Soljačić. In his exhausted state, he wished the phone would just begin charging itself as soon as it was brought into the house.

So Soljačić started searching for ways to transmit power wirelessly. Instead of pursuing a long-distance scheme like Tesla’s, he decided to look for midrange power transmission methods that could charge–or even power–portable devices such as cell phones, PDAs, and laptops. He considered using radio waves, which effectively send information through the air, but found that most of their energy would be lost in space. More-targeted methods like lasers require a clear line of sight–and could have harmful effects on anything in their way. So Soljačić sought a method that was both efficient–able to directly power receivers without dissipating energy to the surroundings–and safe.

He eventually landed on the phenomenon of resonant coupling, in which two objects tuned to the same frequency exchange energy strongly but interact only weakly with other objects. A classic example is a set of wine glasses, each filled to a different level so that it vibrates at a different sound frequency. If a singer hits a pitch that matches the frequency of one glass, the glass might absorb so much acoustic energy that it will shatter; the other glasses remain unaffected.

Soljačić found magnetic resonance a promising means of electricity transfer because magnetic fields travel freely through air yet have little effect on the environment or, at the appropriate frequencies, on living beings. Working with MIT physics professors John Joannopoulos and Peter Fisher and three students, he devised a simple setup that wirelessly powered a 60-watt light bulb.

The researchers built two resonant copper coils and hung them from the ceiling, about two meters apart. When they plugged one coil into the wall, alternating current flowed through it, creating a magnetic field. The second coil, tuned to the same frequency and hooked to a light bulb, resonated with the magnetic field, generating an electric current that lit up the bulb–even with a thin wall between the coils.

So far, the most effective setup consists of 60-centimeter copper coils and a 10-megahertz magnetic field; this transfers power over a distance of two meters with about 50 percent efficiency. The team is looking at silver and other materials to decrease coil size and boost efficiency. “While ideally it would be nice to have efficiencies at 100 percent, realistically, 70 to 80 percent could be possible for a typical application,” says Soljačić.
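The efficiency figures translate directly into how much power the transmitting coil has to draw. The sketch below works through that arithmetic for the 60-watt bulb, and also shows the textbook resonance formula for an ideal inductor-capacitor circuit, f = 1/(2π√(LC)), as a way to think about "tuning" two coils to 10 megahertz. Treating each coil as an ideal LC circuit is an assumption made here for illustration, and the 20-microhenry inductance is a hypothetical value, not a figure from the MIT setup.

```python
import math

def input_power(load_watts, efficiency):
    """Power the transmitter must supply to deliver load_watts to the receiver."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return load_watts / efficiency

def resonant_frequency(inductance_h, capacitance_f):
    """Resonant frequency (Hz) of an ideal LC circuit."""
    return 1.0 / (2 * math.pi * math.sqrt(inductance_h * capacitance_f))

def capacitance_for(frequency_hz, inductance_h):
    """Capacitance (F) that tunes a given inductance to frequency_hz."""
    return 1.0 / ((2 * math.pi * frequency_hz) ** 2 * inductance_h)

if __name__ == "__main__":
    for eff in (0.5, 0.7, 0.8):
        print(f"{eff:.0%} efficient: {input_power(60, eff):.1f} W in for 60 W out")
    coil_l = 20e-6  # hypothetical coil inductance, in henries
    c = capacitance_for(10e6, coil_l)
    print(f"capacitance to tune to 10 MHz: {c * 1e12:.1f} pF")
```

At 50 percent efficiency, lighting the 60-watt bulb takes 120 watts at the source; at 80 percent it would take 75 watts.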

Other means of recharging batteries without cords are emerging. Startups such as Powercast, Fulton Innovation, and WildCharge have begun marketing adapters and pads that allow consumers to wirelessly recharge cell phones, MP3 players, and other devices at home or, in some cases, in the car. But Soljačić’s technique differs from these approaches in that it might one day enable devices to recharge automatically, without the use of pads, whenever they come within range of a wireless transmitter.

The MIT work has attracted the attention of consumer-electronics companies and the auto industry. The U.S. Department of Defense, which is funding the research, hopes it will also give soldiers a way to automatically recharge batteries. However, Soljačić remains tight-lipped about possible industry collaborations.

“In today’s battery-operated world, there are so many potential applications where this might be useful,” he says. “It’s a powerful concept.”