This AI thinks like a dog

All dog owners can testify to the powerful intelligence of their four-legged friends. Indeed, many dogs provide important services, such as guiding people who are visually impaired, finding lost individuals, or sniffing out drugs and other contraband.

These abilities are beyond even the most powerful artificial-intelligence systems. Yet AI researchers have so far not taken advantage of them in training AI systems to be more capable.

Today that changes thanks to the work of Kiana Ehsani at the University of Washington in Seattle and colleagues, who have gathered a unique data set of canine behavior and used it to train an AI system to make dog-like decisions. Their approach opens up a new area of AI research that studies the capabilities of other intelligent beings on our planet.

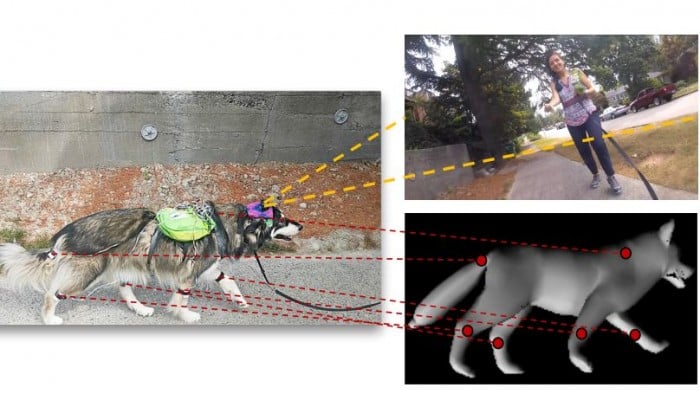

The team began by building a database of dog behavior. They did this by kitting out a single dog with inertial measurement units on its legs, tail, and body to record the relative angles of these body parts and the animal’s absolute position.

They also fitted a GoPro camera to the dog’s head to record the visual scene, sampled at a rate of five frames per second, and a microphone on the dog’s back to record sound. The data was recorded by an Arduino unit on the dog’s back.

In total, the team gathered some 24,500 video frames with synchronized body position and movement data. They used 21,000 of these frames for AI training and the rest for validation and testing of the AI system.
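As a rough illustration of that split, the sketch below divides 24,500 frame indices into a 21,000-frame training set and a 3,500-frame remainder. The article does not say how the remainder was divided between validation and testing, or whether frames were split chronologically or at random. The even halves and the contiguous split used here are assumptions, and `split_frames` is a hypothetical helper, not the team's code.

```python
def split_frames(n_frames, n_train, val_fraction=0.5):
    """Split frame indices into contiguous train / validation / test ranges.

    val_fraction is an assumption: the source only says "the rest" went to
    validation and testing, not how it was divided between the two.
    """
    if not 0 <= n_train <= n_frames:
        raise ValueError("n_train must be between 0 and n_frames")
    remainder = n_frames - n_train
    n_val = int(remainder * val_fraction)
    train = range(0, n_train)
    val = range(n_train, n_train + n_val)
    test = range(n_train + n_val, n_frames)
    return train, val, test


train, val, test = split_frames(24_500, 21_000)
print(len(train), len(val), len(test))  # 21000 1750 1750
```

Whatever the real division was, only 3,500 frames (about 14 percent of the data) were held back from training.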

The team investigated how to act like a dog, how to plan like a dog, and how to learn from a dog.

In the first task, the goal was to predict the future movements of the dog given a sequence of images. The AI learns to do this by looking at video frames and studying what the dog did next.

By learning in this way, the system becomes good at accurately predicting the next five movements after seeing a sequence of five images. “Our model correctly predicts the future dog movements by only observing the images the dog observed in the previous time steps,” say Ehsani and co.
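One way to picture this set-up is as a window sliding over the recording: five consecutive frames form the input, and the five movements that follow form the target. The sketch below builds such pairs from toy data. It assumes that `movements[i]` is the movement recorded at the same time step as `frames[i]`; that alignment, the string placeholders, and the `make_windows` helper are all hypothetical and not taken from the paper.

```python
def make_windows(frames, movements, n_in=5, n_out=5):
    """Pair each run of n_in frames with the n_out movements that follow it."""
    if len(frames) != len(movements):
        raise ValueError("frames and movements must have the same length")
    examples = []
    last_start = len(frames) - n_in - n_out
    for start in range(last_start + 1):
        seen = frames[start:start + n_in]                       # what the dog saw
        future = movements[start + n_in:start + n_in + n_out]   # what it did next
        examples.append((seen, future))
    return examples


# Toy stand-ins for real video frames and sensor readings.
frames = [f"frame_{i}" for i in range(12)]
movements = [f"move_{i}" for i in range(12)]

examples = make_windows(frames, movements)
print(len(examples))  # 3
print(examples[0][0])  # frames 0 to 4
print(examples[0][1])  # movements 5 to 9
```

A model trained on pairs like these sees only the images, never the sensor readings, at prediction time, which matches the quote above.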

The planning task is a little more difficult. The goal here is to find a sequence of actions that move the dog between the locations of a given pair of images. The AI again learns this by studying the actions associated with a wide range of sequential video frames.

Once again, the system performs well when compared with the baseline approaches the team tested. “Our results show that our model outperforms these baselines in the challenging task of planning like a dog both in terms of accuracy and perplexity,” say the team.
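The article does not define the two measures named in that quote. The sketch below uses their standard definitions, on the assumption that each movement is treated as one of a fixed set of discrete classes and that the model outputs a probability for each class. Accuracy is the fraction of steps where the most probable class is the right one. Perplexity is the exponential of the average negative log-probability given to the right class, so lower is better. The numbers are made up for illustration.

```python
import math


def accuracy(probs, targets):
    """Fraction of steps where the highest-probability class is the true one."""
    correct = 0
    for p, t in zip(probs, targets):
        predicted = max(range(len(p)), key=p.__getitem__)
        if predicted == t:
            correct += 1
    return correct / len(targets)


def perplexity(probs, targets):
    """exp of the mean negative log-probability assigned to the true class."""
    nll = -sum(math.log(p[t]) for p, t in zip(probs, targets)) / len(targets)
    return math.exp(nll)


# Hypothetical predictions over three movement classes for four time steps.
probs = [
    [0.50, 0.25, 0.25],
    [0.25, 0.50, 0.25],
    [0.25, 0.25, 0.50],
    [0.50, 0.25, 0.25],
]
targets = [0, 1, 2, 1]  # the last step is predicted wrongly

print(accuracy(probs, targets))              # 0.75
print(round(perplexity(probs, targets), 3))  # 2.378
```

As a reference point, a model that guesses uniformly among k classes has a perplexity of exactly k, so a value of about 2.4 with three classes is only a modest improvement over chance.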

The final task is to learn from dog behavior. One thing dogs learn is where they can and can’t walk. So the team use the database to train the AI to recognize what kinds of surfaces are walkable and to label these in new images.

That’s interesting work that shows how AI systems can match certain types of animal performance. “Our model learns from ego-centric video and movement information to act and plan like a dog would in the same situation,” they say.

Of course, there is plenty of work ahead. For example, this work gathers data from a single dog. So the team would like to study data gathered from a wide range of dogs. That would allow them to compare their behavior and understand canine visual intelligence in more detail.

But there is no reason why the approach should be limited to dogs. There would be much to learn by gathering similar data sets for monkeys, farm animals, and all kinds of animals in the wild. As Ehsani and co put it: “We hope this work paves the way towards better understanding of visual intelligence and of the other intelligent beings that inhabit our world.”

Ref: arxiv.org/abs/1803.10827 : Who Let The Dogs Out? Modeling Dog Behavior From Visual Data
