Baidu’s Artificial Intelligence Lab Unveils Synthetic Speech System

In the battle to apply deep-learning techniques to the real world, one company stands head and shoulders above the competition. Google’s DeepMind subsidiary has used the technique to create machines that can beat humans at video games and the ancient game of Go. And last year, Google Translate services significantly improved thanks to the behind-the-scenes introduction of deep-learning techniques.

So it’s interesting to see how other companies are racing to catch up. Today, it is the turn of Baidu, an Internet search company that is sometimes described as the Chinese equivalent of Google. In 2013, Baidu opened an artificial intelligence research lab in Silicon Valley, raising an interesting question: what has it been up to?

Now Baidu’s artificial intelligence lab has revealed its work on speech synthesis. One of the challenges in speech synthesis is to reduce the amount of fine-tuning that goes on behind the scenes. Baidu’s big breakthrough is to create a deep-learning machine that largely does away with this kind of meddling. The result is a text-to-speech system called Deep Voice that can learn to talk in just a few hours with little or no human interference.

First some background. Text-to-speech systems are familiar in the modern world in navigation apps, talking clocks, telephone answering systems, and so on. Traditionally these have been created by recording a large database of speech from a single individual and then recombining the utterances to make new phrases.
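The recombination step can be sketched in a few lines of Python. This sketch assumes each recorded unit is stored as a list of audio samples under a name. The `concatenate_units` function, the toy database, and the short linear crossfade used to smooth each seam are illustrative assumptions, not how any particular product works.

```python
from typing import Dict, List

def concatenate_units(units: List[str], database: Dict[str, List[float]], fade: int = 2) -> List[float]:
    """Join prerecorded snippets, linearly crossfading `fade` samples at each seam.

    The unit names and the database are hypothetical placeholders, not real recordings.
    """
    output: List[float] = []
    for name in units:
        clip = database[name]  # KeyError if the phrase needs a unit that was never recorded
        if not output or fade == 0:
            output.extend(clip)
            continue
        n = min(fade, len(output), len(clip))
        for i in range(n):
            w = (i + 1) / (n + 1)  # weight ramps from the old clip toward the new one
            output[-n + i] = (1 - w) * output[-n + i] + w * clip[i]
        output.extend(clip[n:])  # the overlapped samples were already blended in
    return output
```

The function can only rearrange what was recorded. A phrase that needs a unit missing from the database raises a `KeyError`, and every output sample comes from the original speaker.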

The problem with these systems is that it is difficult to switch to a new speaker or change the emphasis in their words without recording an entirely new database. So computer scientists have been working on another approach. Their goal is to synthesize speech in real time from scratch as it is required.

Last year, Google’s DeepMind made a significant breakthrough in this area. It unveiled a neural network that learns how to speak by listening to the sound waves from real speech while comparing this to a transcript of the text. After training, it was able to produce synthetic speech based on text it was given. Google DeepMind called its system WaveNet.

Baidu’s work improves on WaveNet, which still requires some fine-tuning during the training process. WaveNet is also so computationally demanding that it is unclear whether it could ever synthesize speech in real time in practical applications.
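Much of that cost comes from how WaveNet-style models produce audio. They work autoregressively: each new sample is predicted from the samples generated just before it. The Python sketch below shows only that control flow. `predict_next` is a hypothetical stand-in for a full neural-network forward pass, and `toy_model` is a made-up example.

```python
from typing import Callable, List, Sequence

def generate(predict_next: Callable[[Sequence[float]], float],
             num_samples: int, receptive_field: int) -> List[float]:
    """Produce audio one sample at a time; each step sees only recent past output."""
    audio: List[float] = []
    for _ in range(num_samples):
        context = audio[-receptive_field:]    # the most recent samples the model can see
        audio.append(predict_next(context))   # must wait for the previous step to finish
    return audio

def toy_model(context: Sequence[float]) -> float:
    """Hypothetical model: start at 1.0, then halve the previous sample."""
    return 0.5 * context[-1] if context else 1.0

print(generate(toy_model, 4, receptive_field=3))  # [1.0, 0.5, 0.25, 0.125]
```

Each call depends on the output of the one before, so the tens of thousands of calls needed for one second of audio cannot simply be run in parallel.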

Baidu says it has overcome these problems. Its approach is relatively simple. It uses deep-learning techniques to convert text into phonemes, the smallest perceptually distinct units of sound. It then uses a speech-synthesis network to reproduce these sounds. The key difference is that every stage of the process works by deep learning, so once the system is trained there is little need for human fine-tuning.
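The overall shape of such a pipeline is a chain of stages: text to phonemes, phonemes to timing and pitch, and those to audio. The Python sketch below assumes this three-stage split. In Baidu’s system each stage is a trained neural network. Here every stage is a hypothetical placeholder: a one-word lexicon, fixed prosody values, and a plain sine tone in place of a neural synthesizer.

```python
import math
from typing import Dict, List, Tuple

# Toy pronunciation dictionary using the same symbols as the "hello" example.
LEXICON: Dict[str, List[str]] = {"hello": ["HH", "EH", "L", "OW"]}

def text_to_phonemes(text: str) -> List[str]:
    """Stage 1 (a trained model in the real system; a dictionary lookup here)."""
    return [p for word in text.lower().split() for p in LEXICON[word]]

def predict_prosody(phonemes: List[str]) -> List[Tuple[str, float, float]]:
    """Stage 2: attach a duration in seconds and a pitch in Hz (fixed stub values)."""
    return [(p, 0.08, 120.0) for p in phonemes]

def synthesize(prosody: List[Tuple[str, float, float]], sample_rate: int = 16000) -> List[float]:
    """Stage 3 (a neural synthesis network in the real system; a sine tone here)."""
    audio: List[float] = []
    for _phoneme, duration, f0 in prosody:
        n = round(duration * sample_rate)
        audio.extend(math.sin(2 * math.pi * f0 * i / sample_rate) for i in range(n))
    return audio

def text_to_speech(text: str) -> List[float]:
    return synthesize(predict_prosody(text_to_phonemes(text)))
```

Because the stages only pass plain data to one another, any stub can be swapped for a trained model without changing the others.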

Take, for example, the word “hello.” Baidu’s system first has to work out the phoneme boundaries, which for this word are: “(silence, HH), (HH, EH), (EH, L), (L, OW), (OW, silence).” It then feeds these into a speech-synthesis system, which utters the word.
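The boundary list for “hello” consists of each adjacent pair of phonemes, with silence added at both ends. The Python sketch below reproduces that list. It does not reproduce how Baidu’s trained model locates those boundaries in real audio, and the `phoneme_pairs` name is hypothetical.

```python
from typing import List, Tuple

def phoneme_pairs(phonemes: List[str], pad: str = "silence") -> List[Tuple[str, str]]:
    """Return every adjacent pair of phonemes, padding both ends with silence."""
    padded = [pad] + phonemes + [pad]
    return list(zip(padded, padded[1:]))

print(phoneme_pairs(["HH", "EH", "L", "OW"]))
# [('silence', 'HH'), ('HH', 'EH'), ('EH', 'L'), ('L', 'OW'), ('OW', 'silence')]
```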

The variables that the new system controls are the stresses on the phonemes, their duration, and the fundamental frequency (pitch) of the sound. Adjusting these allows Baidu to change the voice of the speaker and the emotion the word conveys.
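To see how duration and pitch give that kind of control, consider a hypothetical helper that rescales predicted prosody before it is passed to the synthesizer. The Python sketch below covers only duration and pitch, not stress. The `adjust_prosody` name and the (phoneme, seconds, hertz) tuple layout are assumptions. The semitone conversion uses the standard rule that 12 semitones double the frequency.

```python
from typing import List, Tuple

Prosody = List[Tuple[str, float, float]]  # (phoneme, duration in seconds, pitch in Hz)

def adjust_prosody(prosody: Prosody, rate: float = 1.0, semitones: float = 0.0) -> Prosody:
    """Speed speech up (rate > 1) or slow it down, and shift pitch by a number of semitones."""
    pitch_factor = 2 ** (semitones / 12)  # +12 semitones = one octave = twice the frequency
    return [(p, d / rate, f0 * pitch_factor) for p, d, f0 in prosody]

# Faster and an octave higher, e.g. to suggest excitement:
print(adjust_prosody([("HH", 0.1, 100.0)], rate=2.0, semitones=12))
```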

All of this is computationally demanding. The sampling rate for realistic speech is in the region of 48 kilohertz, so a computer has about 20 microseconds to generate each sample. Because each sample passes through several layers of the network, each layer must do its job in about 1.5 microseconds. To put this in context, accessing a value that resides in main memory, rather than in the processor’s cache, can take 0.1 microseconds.
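The figures quoted above can be checked with a few lines of arithmetic in Python. The article does not state the number of layers, so the second result is only what the two quoted timings imply, not a property of Baidu’s model.

```python
SAMPLE_RATE_HZ = 48_000   # sampling rate quoted above
LAYER_BUDGET_US = 1.5     # per-layer time budget quoted above
DRAM_ACCESS_US = 0.1      # main-memory access time quoted above

sample_budget_us = 1e6 / SAMPLE_RATE_HZ                # time available per sample
layers_that_fit = sample_budget_us / LAYER_BUDGET_US   # implied ceiling, not a stated layer count
accesses_per_layer = LAYER_BUDGET_US / DRAM_ACCESS_US  # main-memory reads that would use up a layer's budget

print(f"{sample_budget_us:.2f} us per sample")                 # 20.83 us per sample
print(f"{layers_that_fit:.1f} layers at 1.5 us each")          # 13.9 layers at 1.5 us each
print(f"{accesses_per_layer:.0f} main-memory reads per layer")  # 15 main-memory reads per layer
```

Just 15 trips to main memory would exhaust a layer’s entire budget, which is why the model has to live in cache.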

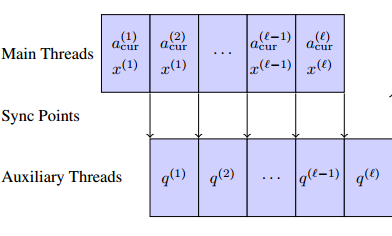

“To perform inference at real-time, we must take great care to never recompute any results, store the entire model in the processor cache (as opposed to main memory), and optimally utilize the available computational units,” say the Baidu researchers.
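One common way to “never recompute any results” in an autoregressive convolutional model is to give each layer a small queue of its own past inputs. Each new sample then costs a constant amount of work instead of a pass over all the audio generated so far. The Python sketch below is a toy, not Baidu’s hand-optimized kernels. It assumes a stack of dilated causal layers with two taps each (the current input and the input `dilation` steps back). The names `layer_full`, `stack_full`, and `CachedStack` are hypothetical.

```python
import math
from collections import deque
from typing import List, Tuple

Layer = Tuple[int, float, float]  # (dilation >= 1, weight on x[t - dilation], weight on x[t])

def layer_full(xs: List[float], layer: Layer) -> List[float]:
    """Recompute one causal layer over the whole sequence (zeros before t = 0)."""
    d, w_past, w_now = layer
    return [math.tanh(w_past * (xs[t - d] if t >= d else 0.0) + w_now * xs[t])
            for t in range(len(xs))]

def stack_full(xs: List[float], layers: List[Layer]) -> List[float]:
    for layer in layers:
        xs = layer_full(xs, layer)
    return xs

class CachedStack:
    """Process one new input at a time, remembering each layer's recent inputs."""

    def __init__(self, layers: List[Layer]):
        self.layers = layers
        # Each queue holds that layer's last `dilation` inputs, oldest first, starting as zeros.
        self.queues = [deque([0.0] * d, maxlen=d) for d, _, _ in layers]

    def step(self, x: float) -> float:
        for (d, w_past, w_now), q in zip(self.layers, self.queues):
            past = q[0]   # this layer's input from `d` steps ago
            q.append(x)   # maxlen discards the oldest entry automatically
            x = math.tanh(w_past * past + w_now * x)
        return x
```

Both versions produce the same outputs, but the cached one touches each layer once per sample.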

Nevertheless, they say that real-time speech synthesis is possible with their system, and they have tested its output by crowdsourcing listener judgments on Amazon’s Mechanical Turk. This involved asking a large number of listeners to rate the quality of the audio and compare it with ground truth in the form of original human recordings.
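Crowdsourced listening tests like this are commonly summarized as a mean opinion score (MOS): the average of ratings on a 1-to-5 scale, usually reported with a confidence interval. The Python sketch below assumes that setup. It uses a simple normal approximation for the interval, and the `mean_opinion_score` name and the sample ratings are made up for illustration, not Baidu’s data.

```python
import math
from statistics import mean, stdev
from typing import List, Tuple

def mean_opinion_score(ratings: List[int]) -> Tuple[float, float]:
    """Return the mean rating and the half-width of an approximate 95% confidence interval."""
    if len(ratings) < 2:
        raise ValueError("need at least two ratings to estimate spread")
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must be on a 1-5 scale")
    half_width = 1.96 * stdev(ratings) / math.sqrt(len(ratings))  # normal approximation
    return mean(ratings), half_width

# Hypothetical ratings for one synthesized clip:
score, ci = mean_opinion_score([3, 4, 5, 4, 4])
print(f"MOS {score:.2f} +/- {ci:.2f}")
```

Scoring the original human recordings the same way gives the ground-truth baseline that synthetic audio is compared against.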

Baidu says the results are of high quality. “We optimize inference to faster-than-real-time speeds, showing that these techniques can be applied to generate audio in real-time in a streaming fashion,” they say.
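“Faster than real time” is usually expressed as a real-time factor: the time spent synthesizing divided by the duration of the audio produced. A value below 1.0 means audio can be streamed out faster than it plays back. The Python sketch below is a minimal example. The function names are hypothetical, and the dummy chunk generator stands in for a real model.

```python
import time
from typing import Callable, List

def real_time_factor(num_samples: int, sample_rate: int, seconds_taken: float) -> float:
    """Synthesis time divided by audio duration; below 1.0 is faster than real time."""
    return seconds_taken / (num_samples / sample_rate)

def measure_rtf(generate_chunk: Callable[[int], List[float]],
                chunk_size: int, num_chunks: int, sample_rate: int) -> float:
    """Time a streaming loop that produces audio chunk by chunk."""
    start = time.perf_counter()
    total = 0
    for _ in range(num_chunks):
        total += len(generate_chunk(chunk_size))  # in a real system, each chunk is played as it arrives
    elapsed = time.perf_counter() - start
    return real_time_factor(total, sample_rate, elapsed)

print(real_time_factor(48_000, 48_000, 0.5))  # 0.5: one second of audio made in half a second
```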

But more significant still is the utility of the system, which can be retrained rapidly on entirely new data sets. “Our system is trainable without any human involvement, dramatically simplifying the process of creating text-to-speech systems,” says the team.

That is interesting work that builds on Google’s efforts to make text-to-speech systems significantly better. It matters because the decades-old dream of science fiction writers is to speak to computers in real time and have them answer back. Text-to-speech is an important part of that.

Of course, it is unlikely that Google’s DeepMind (or anybody else in the world of deep learning) has been sitting still while Baidu perfects its synthetic speech system. It will surely be just a matter of time before we see what they’ve been up to and how it compares.

Ref: arxiv.org/abs/1702.07825: Deep Voice: Real-time Neural Text-to-Speech

