Government Seeks High-Fidelity “Brain-Computer” Interface

Konrad Kording has seen the future of neuroscience and he finds it depressing.

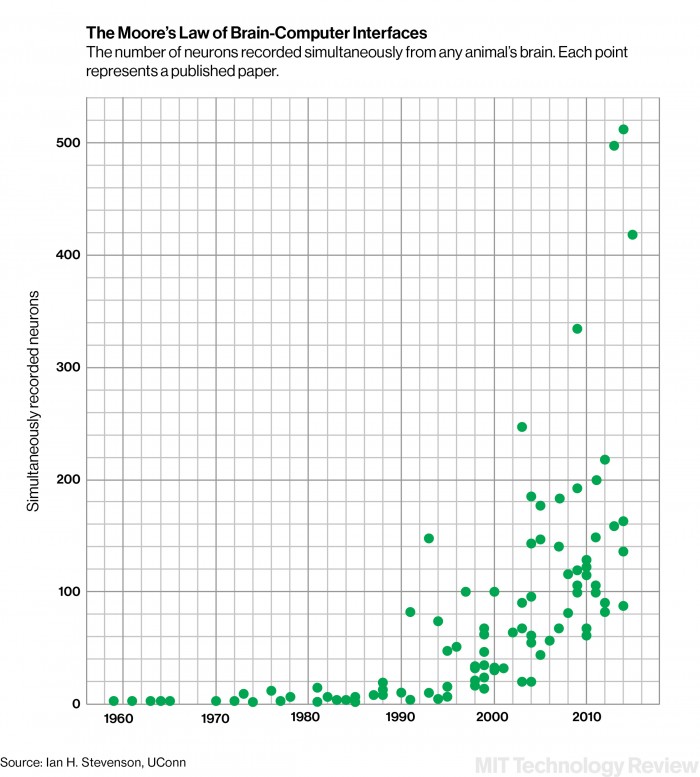

A few years back, Kording, a data scientist at Northwestern University with an interest in neuroscience, decided to examine how many neurons scientists had ever simultaneously recorded in the brain of a living animal. Recording the electrical chatter of neurons is something we’ll need to do much more of if we want to understand consciousness or develop ways to restore movement to paralyzed individuals.

The result was a 2011 Nature Neuroscience article detailing “Stevenson’s law”—so named for graduate student and first author Ian Stevenson. Similar to Moore’s law, which predicts a doubling of computing power every two years, “Stevenson’s law” also documented exponential growth in the number of neurons scientists have been able to record from at one time. Yet while everyone is “happy and impressed by Moore’s law,” Kording says that “everyone in neuroscience wants to see Stevenson’s law die.”

The reason is that each of us has roughly 80 billion neurons in our brains. Yet what the data showed is that from the 1920s, when scientists first listened in on the electrical spikes of a single neuron, they’d doubled the number only every seven years, to about 500 at once. At this pace, says Kording, “We’ll all be dead before we can record even part of a mouse brain. That’s not cool.”
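To get a feel for why that pace worries Kording, the arithmetic can be sketched in a few lines of Python. This is only a back-of-the-envelope extrapolation: it takes the article's figures (about 500 neurons today, a doubling every seven years, roughly 80 billion neurons in a human brain) at face value and assumes the trend continues unchanged. The function names are hypothetical and chosen for illustration.

```python
import math

# Figures taken from the article; treating them as exact is an assumption.
CURRENT_NEURONS = 500      # approximate simultaneous-recording record
DOUBLING_YEARS = 7.0       # doubling time under "Stevenson's law"


def years_to_reach(target, current=CURRENT_NEURONS, doubling_years=DOUBLING_YEARS):
    """Years until `target` neurons can be recorded if the doubling trend holds."""
    doublings = math.log2(target / current)
    return doublings * doubling_years


def required_doubling_time(target, years, current=CURRENT_NEURONS):
    """Doubling time (in years) needed to reach `target` within `years`."""
    return years / math.log2(target / current)


if __name__ == "__main__":
    print(f"1 million neurons: {years_to_reach(1_000_000):.0f} years")
    print(f"80 billion neurons: {years_to_reach(80e9):.0f} years")
    months = 12 * required_doubling_time(1_000_000, years=4)
    print(f"Doubling time needed for 1 million in 4 years: {months:.1f} months")
```

Under these assumptions, reaching one million neurons would take roughly 77 years and a whole human brain about 190 years. Hitting one million in four years would instead require a doubling every four to five months.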

This week scores of neuroscientists will head to Arlington, Virginia, to discuss how they might go about breaking Stevenson’s law. The draw is a symposium spelling out the requirements for a new Department of Defense program called “Neural Engineering System Design,” which will dole out $60 million as part of President Obama’s BRAIN Initiative. The goal: to develop technologies able to record from one million neurons simultaneously in only four years.

But that’s just a start. DARPA, the agency administering the program, also wants a device that can stimulate at least 100,000 neurons in the brain. It must be wireless, and any electronics have to fit in a package not much larger than a nickel. Finally, researchers are expected to meet the safety requirements necessary to carry out studies on human subjects, something that requires an “investigational device exemption” from the U.S. Food and Drug Administration.

Michael Roukes, a professor of physics and bioengineering at Caltech, calls the time frame “extremely aggressive.” The agency’s tendency to set ambitious goals that aren’t always met is known as “DARPA crazy.”

“But I understand the model,” he says. “Let’s take a moon shot, right?”

The objective of the project doesn’t surprise Roukes. The stated goal of the BRAIN Initiative (see “Why Obama’s Brain-Mapping Project Matters”) is to develop ways of reading—and writing to—the large-scale ensembles of brain cells that comprise the circuits of the brain and work together to let us perceive and react to the world. To do so, it’s clear that the hardware of neuroscience needs a major upgrade.

“What’s most important is developing the technology to take a complete neural circuit—let’s say the brain of a small animal or a piece of cortex from a mouse or a human, and record from every neuron there,” says Rafael Yuste, a Columbia University neuroscientist. Yuste says most scientists still use recordings in which “people stick in these electrodes and record activity from one neuron in an animal or one patient. Just imagine you are trying to analyze an orchestra [by listening to] what a single instrument is playing.”

The current records for simultaneous recording are held by teams trying to develop “brain-machine interfaces” for paralyzed people, a technology that also interests DARPA. Teams at Brown University and the University of Pittsburgh, among other places, have managed to use arrays of sharp silicon needles to record from between 200 and 300 neurons at once inside the brains of volunteers. That’s enough to roughly “mind read” what arm and hand motions a person is thinking about, and use the signal to move a robotic arm (see “The Thought Experiment”) or steer a wheelchair.

“You do see robotic arms being moved today,” says Jonathan Wolpaw, an expert on brain-computer interfaces at the Wadsworth Center of the New York State Department of Health. “But they’re not anything that’s anywhere near ready to be taken out of the lab. There’s no BCI that you would now want to use to control a wheelchair on the edge of a cliff or to drive in heavy traffic.”

One reason neuroscientists are certain that larger ensembles of neurons hold answers is that the average neuron spikes, at most, a couple of times per second. Yet movement relies on adjustments occurring on a time scale that is at least 10 times faster. That means any single neuron can’t possibly contain the information needed to encode the intricacies of a dance move or playing the piano. “Movement is distributed over many millions of neurons across multiple brain areas,” Kording says. “We need at least 1,000 times more neurons for an awesome prosthetic device, in my estimate.”

One strategy is to shrink the electrodes so that bioengineers can cram more of them into the brain at one time. That approach is being taken at Duke University, says Mikhail A. Lebedev, a senior research scientist in a group that currently claims several neural recording records in monkeys. The group reads about 500 neurons at a time, which it managed by painstakingly inserting bundles of thin electrodes into a monkey’s brain.

Others think completely new approaches are needed. At the University of California, Berkeley, researchers are exploring “neural dust” consisting of microscopic free-floating sensors that could be spread around the brain. Optical techniques also hold promise. In 2013, Misha Ahrens, a neuroscientist at the Howard Hughes Medical Institute’s Janelia Farms campus, showed he could record from 100,000 neurons—virtually the entire brain of a zebrafish—by genetically modifying them to glow after firing off. Ahrens says watching so many neurons at once is already generating some surprises. “You can find areas that are pertinent to behavior where you wouldn’t look otherwise,” he says.

Ahrens’s achievement doesn’t count toward DARPA’s goal, or toward cracking Stevenson’s law, because his method doesn’t catch the neurons’ electrical spikes exactly when they occur. That is because the glowing molecules are set off by changes in calcium concentrations that occur inside a cell only after it fires off. Roukes, the Caltech scientist, says researchers are already working to develop fluorescent molecules that respond directly to voltage changes.

A different problem is that unlike a zebrafish, which is basically translucent, the human brain has a milky consistency that is hard to see through. To deal with that, Roukes says, it may be possible to slide ultra-narrow silicon shanks throughout the brain. These would contain the hardware necessary to both emit light and detect it from nearby cells, getting around the problem of the brain’s opacity. If enough of these pillars were used, the entire brain could be illuminated, Roukes calculates.

Although optical techniques are promising, the FDA could be reluctant to let scientists genetically modify volunteers’ brains so that they glow. Roukes says that as a result, he and his collaborators plan to present DARPA with a more conventional proposal that still relies on electrodes.

DARPA has reasons for insisting any recording scheme be tried in humans. The agency hopes the goal will draw interest from medical device companies as well as manufacturers of semiconductors and optical instruments. Without industry involvement, there’s little chance of making brain-machine interfaces improve as fast as computers have.

“By trying to push this into humans, they may be able to get some technological elements that haven’t been used in the animal experiments because there hasn’t been as much of an economic driver for it,” says Adam Marblestone, a neuroscientist at MIT. He hopes to see some “really serious engineering” that’s been missing from academic experiments.
